Deep Learning Introduction

Deep learning is part of a broader family of machine learning methods based on artificial neural networks.
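To make "based on artificial neural networks" concrete, here is a minimal sketch in plain Python of a two-layer network's forward pass. The weights are hypothetical and hand-picked (not learned) so that the network computes XOR. The names `dense`, `xor_net`, `W1`, `B1`, `W2` and `B2` are illustrative only.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def relu(x: float) -> float:
    return max(0.0, x)


def identity(x: float) -> float:
    return x


def dense(inputs: Vector, weights: Matrix, biases: Vector,
          activation: Callable[[float], float]) -> Vector:
    """One fully connected layer: activation(W x + b), one output per row of W."""
    return [activation(sum(w * v for w, v in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Hypothetical hand-picked weights (not learned) that make this two-layer net compute XOR.
W1: Matrix = [[1.0, 1.0], [1.0, 1.0]]
B1: Vector = [0.0, -1.0]
W2: Matrix = [[1.0, -2.0]]
B2: Vector = [0.0]


def xor_net(x: Vector) -> Vector:
    hidden = dense(x, W1, B1, relu)           # layer 1: two ReLU units
    return dense(hidden, W2, B2, identity)    # layer 2: one linear output


for x1 in (0.0, 1.0):
    for x2 in (0.0, 1.0):
        print(x1, x2, xor_net([x1, x2]))
```

A single linear layer cannot represent XOR; the nonlinear hidden layer is what makes it possible, which is the basic reason networks are built by stacking layers. In practice the weights are learned from data rather than chosen by hand.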

AI Hardware

- Google TPU Cloud

- GPU Server

- GPU Workstation

- GPU PC

- Embedded Systems

AI chips

Top 10 Game Changers in the AI Chip Industry to Know in 2022

- IBM released its neuromorphic chip, TrueNorth, in 2014. TrueNorth contains 5.4 billion transistors, 1 million neurons, and 256 million synapses.

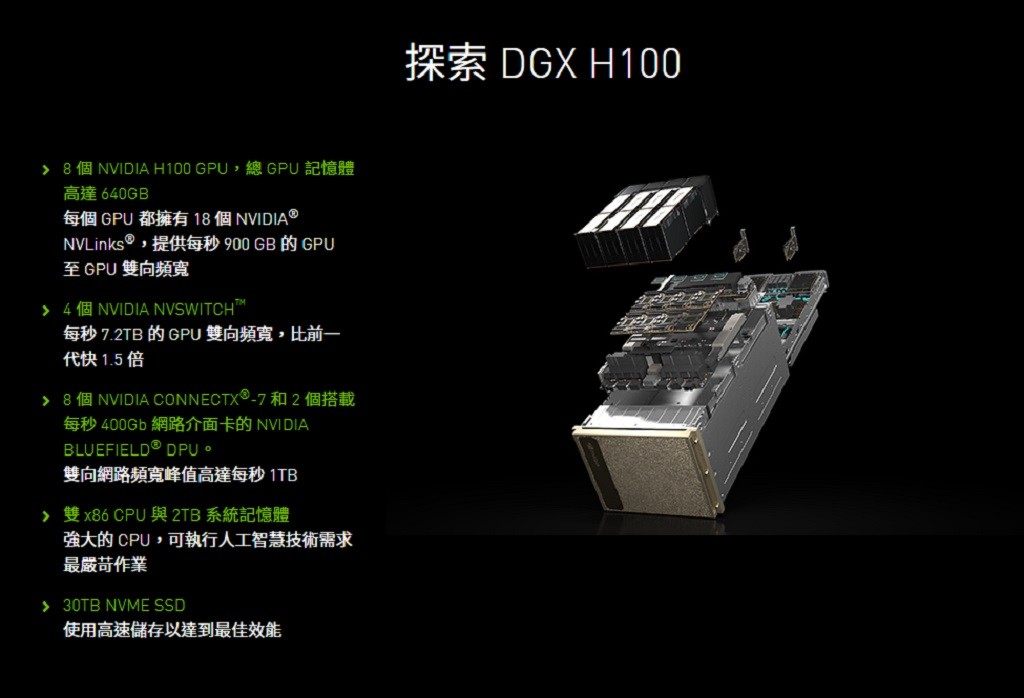

“What we’ve done with the Telum chip is we’ve completely re-architected how these caches work to keep a lot more data a lot closer to the processor core than we have done in the past,” said Christian Jacobi, IBM Fellow and chief technology officer of system architecture and design for IBM zSystems. “To do this, we’ve quadrupled the level two cache. We now have a 32-MB level-two cache.”

- Nvidia H100 features fourth-generation Tensor Cores and the Transformer Engine with FP8 precision, which provides up to 9x faster training than the prior generation for mixture-of-experts (MoE) models. It also uses fourth-generation NVLink, which offers 900 gigabytes per second (GB/s) of GPU-to-GPU interconnect bandwidth.

- Intel Gaudi2, designed by Intel’s Israel-based Habana Labs, is twice as fast as its first-generation predecessor.

- Google Cloud TPU is the purpose-built machine learning accelerator chip that powers Google products such as Translate, Photos, Search, Assistant, and Gmail.

- Advanced Micro Devices (AMD) offers hardware and software solutions, including EPYC CPUs and Radeon Instinct GPUs, for machine learning and deep learning.
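The H100 entry cites FP8 precision. Hopper supports two 8-bit floating-point variants, E4M3 and E5M2. The sketch below decodes E4M3 (1 sign bit, 4 exponent bits with bias 7, 3 mantissa bits), assuming the bit layout of the FP8 format proposed by NVIDIA, Arm, and Intel in 2022. It only decodes bit patterns and ignores rounding and conversion from wider types. The function name `decode_e4m3` is illustrative.

```python
def decode_e4m3(byte: int) -> float:
    """Decode one FP8 E4M3 value: 1 sign bit, 4 exponent bits (bias 7), 3 mantissa bits."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("FP8 values are 8-bit: expected 0..255")
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0xF
    mantissa = byte & 0x7
    if exponent == 0xF and mantissa == 0x7:
        # E4M3 has no infinities; only the all-ones exponent+mantissa pattern is NaN.
        return float("nan")
    if exponent == 0:
        # Subnormal: no implicit leading 1, fixed exponent 1 - bias = -6.
        return sign * (mantissa / 8) * 2.0 ** -6
    # Normal: implicit leading 1.
    return sign * (1 + mantissa / 8) * 2.0 ** (exponent - 7)


for code in (0x01, 0x38, 0xB8, 0x7E, 0x7F):
    print(hex(code), decode_e4m3(code))
```

The largest finite E4M3 magnitude is 448 (pattern `0x7E`), so tensors must be scaled into that narrow range before casting. Managing such per-tensor scaling factors is part of what the Transformer Engine does.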

Leading AI chip startups

- Cerebras Systems WSE-2, which has 850,000 cores and 2.6 trillion transistors

- SambaNova Systems has developed the SN10 processor chip and raised more than $1.1 billion in funding. SambaNova builds data centers and leases them to businesses.

- Graphcore is a British company founded in 2016. It introduced the IPU-POD256 as its flagship AI system. Graphcore has raised around $700 million in funding.

- Groq was founded by former Google employees. The startup has raised around $350 million and has produced its first products, including the GroqChip Processor and the GroqCard Accelerator.

Tesla D1 chip

Enter Dojo: Tesla Reveals Design for Modular Supercomputer & D1 Chip

Each training tile will provide 565 teraflops, and each cabinet (containing 12 tiles) will provide 6.78 petaflops, meaning that one ExaPOD alone will deliver a maximum theoretical performance of 67.8 FP32 petaflops.
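The Dojo figures chain together by simple multiplication. This sketch reproduces them, assuming an ExaPOD consists of 10 cabinets (120 tiles), which is the cabinet count that the 67.8-petaflop figure implies. Integer teraflops are used to avoid floating-point rounding.

```python
# Figures quoted in the Dojo description.
TILE_TFLOPS = 565          # FP32 teraflops per training tile
TILES_PER_CABINET = 12
# Assumption: an ExaPOD is 10 cabinets (120 tiles), which is what 67.8 PFLOPS implies.
CABINETS_PER_EXAPOD = 10

cabinet_tflops = TILE_TFLOPS * TILES_PER_CABINET          # 6,780 TFLOPS
exapod_tflops = cabinet_tflops * CABINETS_PER_EXAPOD      # 67,800 TFLOPS

print(f"cabinet: {cabinet_tflops / 1000:.2f} PFLOPS")     # 1 PFLOPS = 1,000 TFLOPS
print(f"ExaPOD:  {exapod_tflops / 1000:.1f} PFLOPS")
```

These are peak theoretical numbers. Sustained throughput on real training jobs will be lower.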

Tesla details Dojo supercomputer, reveals Dojo D1 chip and training tile module

Google TPU

- TPU System Architecture

- Cloud TPU VM architectures

- Ref. Hardware for Deep Learning Part4: ASIC

- TPU v4

- TPU v3 Block Diagram

- TPU Block Diagram
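Google describes the TPU's matrix unit as a systolic array: a grid of multiply-accumulate (MAC) cells through which operands flow in lockstep, so each value fetched from memory is reused by many cells. The sketch below simulates the timing of a simplified output-stationary array, in which each cell keeps its own partial sum. This schedule was chosen for clarity and is an assumption. It is not the TPU's actual dataflow; the original TPU design keeps weights stationary in the cells instead.

```python
from typing import List

Matrix = List[List[float]]


def systolic_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Simulate an output-stationary n x m grid of MAC cells computing A @ B.

    Row i of A enters from the left delayed by i cycles and column j of B
    enters from the top delayed by j cycles, so cell (i, j) receives the pair
    (A[i][k], B[k][j]) at cycle t = i + j + k and adds their product to its sum.
    """
    n, depth, m = len(a), len(b), len(b[0])
    if any(len(row) != depth for row in a):
        raise ValueError("inner dimensions must match")
    acc = [[0.0] * m for _ in range(n)]
    total_cycles = n + m + depth - 2      # last pair reaches cell (n-1, m-1)
    for t in range(total_cycles):
        for i in range(n):
            for j in range(m):
                k = t - i - j             # which operand pair is at cell (i, j) now
                if 0 <= k < depth:
                    acc[i][j] += a[i][k] * b[k][j]
    return acc


print(systolic_matmul([[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]))
```

In hardware all cells work in parallel within each cycle. The Python loops over `i` and `j` only model which operands meet where and when.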

Nvidia GPUs

- V100 (DataCenter GPU)

- A100 (DataCenter GPU)

- H100 (DataCenter GPU)

- DGX H100 (server system)

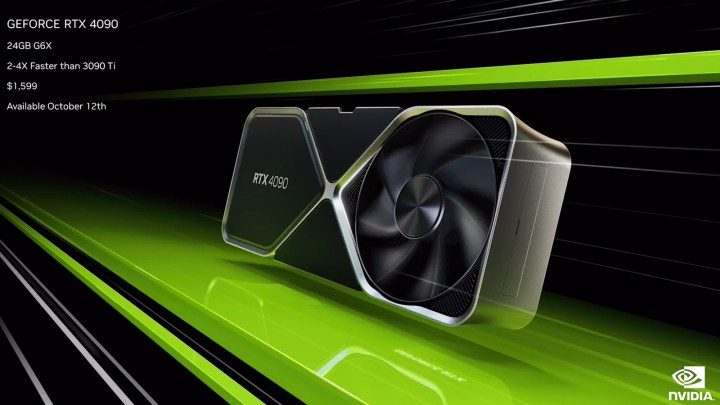

- RTX4090 (Graphics Card)

NVIDIA Hopper Architecture In-Depth

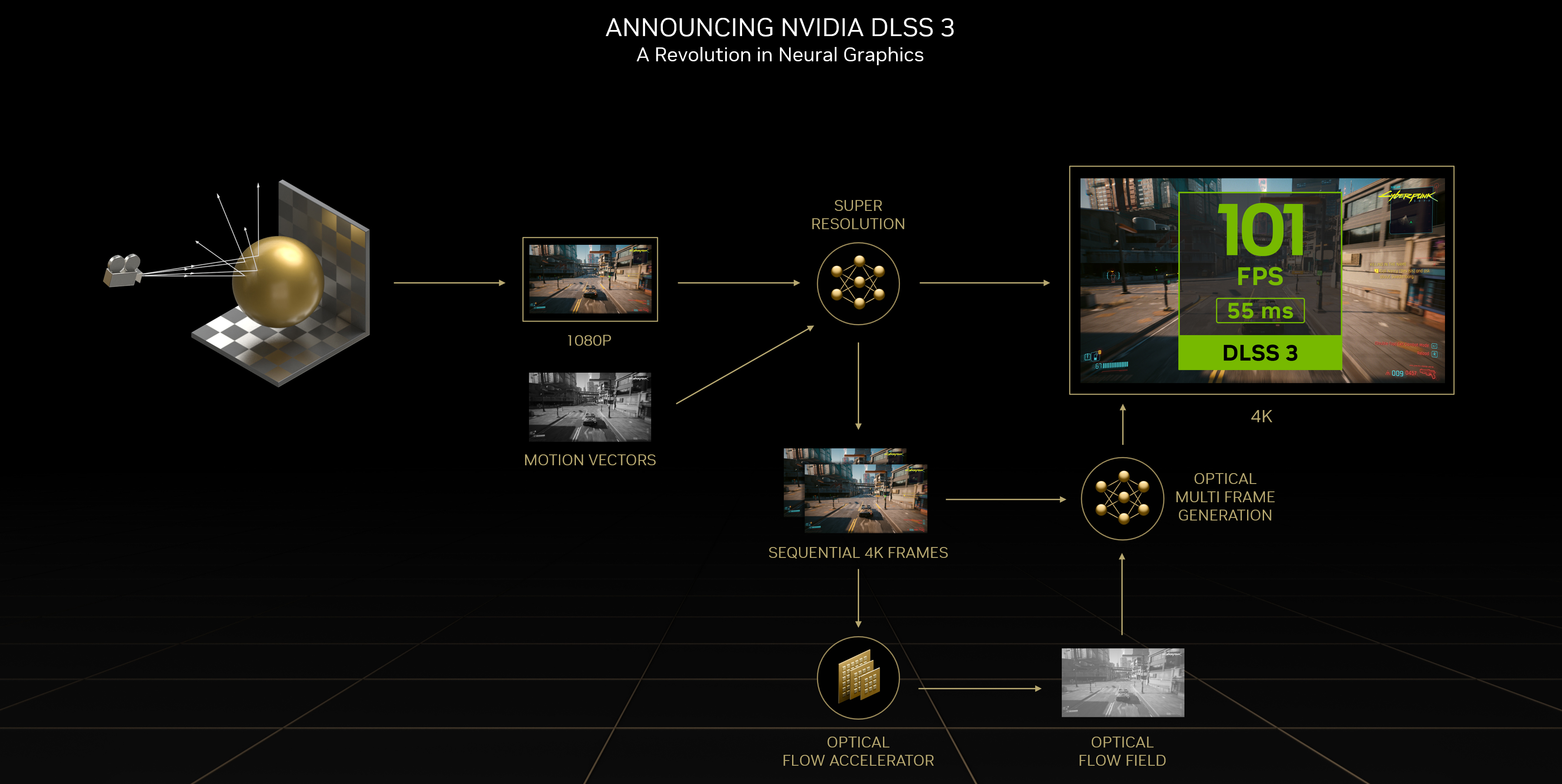

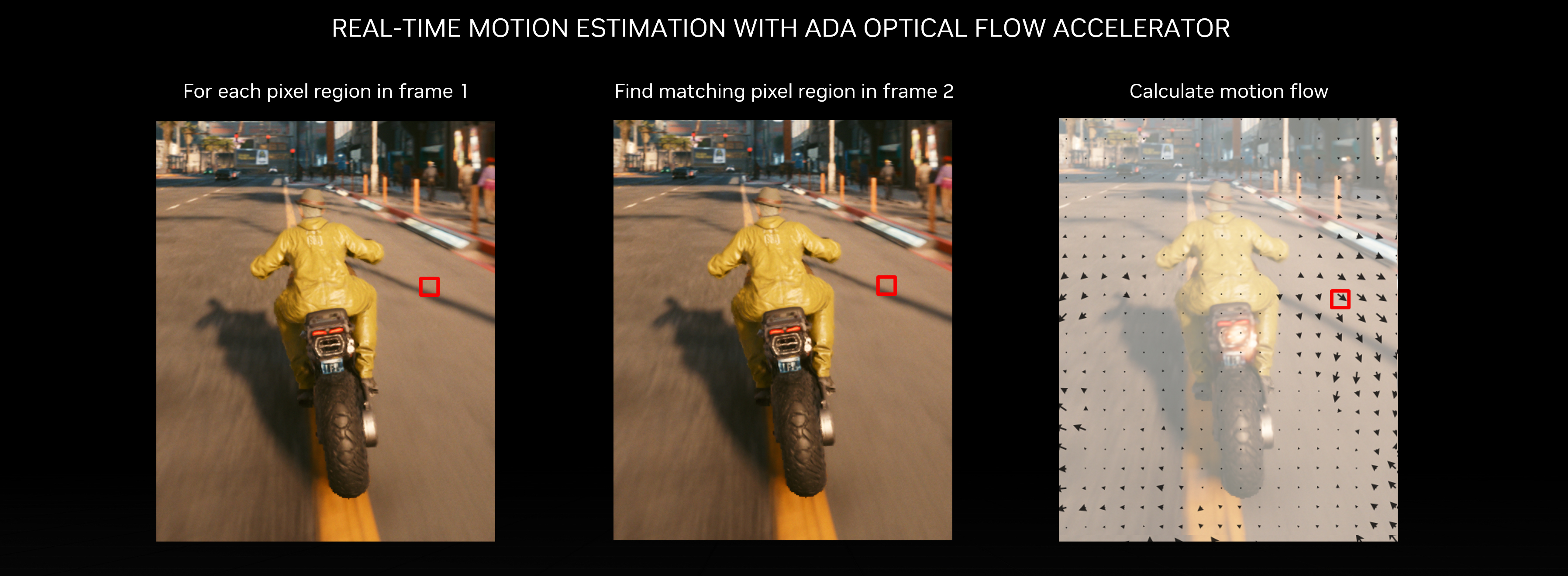

NVIDIA DLSS3

NVIDIA DLSS revolutionized graphics by using AI super resolution and Tensor Cores on GeForce RTX GPUs to boost frame rates while delivering crisp, high quality images that rival native resolution.
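Much of the frame-rate gain from AI super resolution comes from shading fewer pixels and letting the network reconstruct the rest. This sketch assumes an internal render at 1920x1080 upscaled to 3840x2160. The actual internal resolution depends on the DLSS quality mode selected. The function name `shaded_fraction` is illustrative.

```python
from typing import Tuple


def shaded_fraction(render: Tuple[int, int], output: Tuple[int, int]) -> float:
    """Fraction of output pixels that are actually rendered before upscaling."""
    rw, rh = render
    ow, oh = output
    return (rw * rh) / (ow * oh)


# Assumed example: render internally at 1080p, upscale to 4K.
frac = shaded_fraction((1920, 1080), (3840, 2160))
print(f"{frac:.0%} of the 4K pixels are shaded; the rest are reconstructed")
```

The saving in shading work does not translate one-to-one into frame rate, because the upscaling network itself runs on the Tensor Cores and other per-frame work remains.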

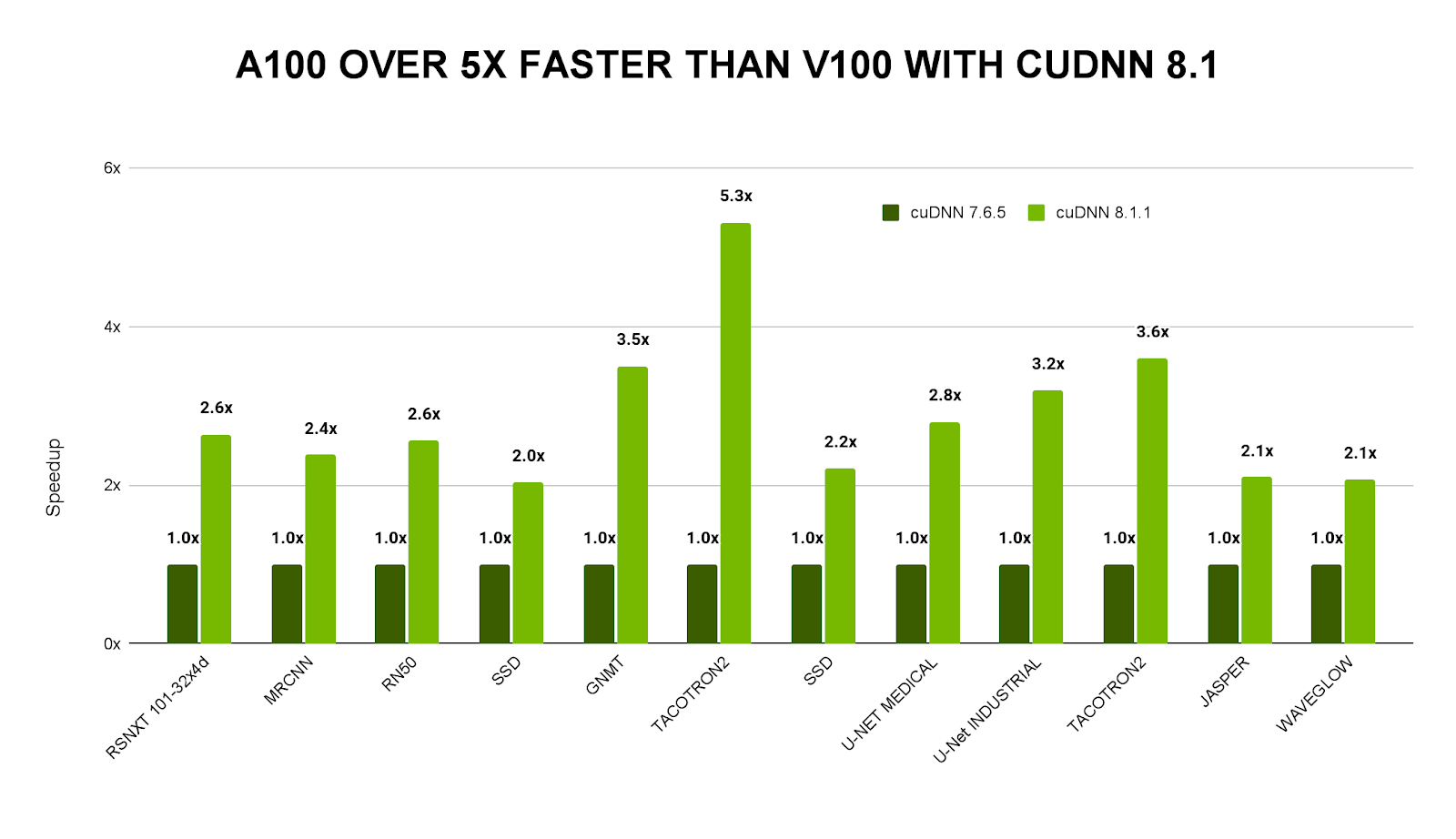

CUDA & cuDNN

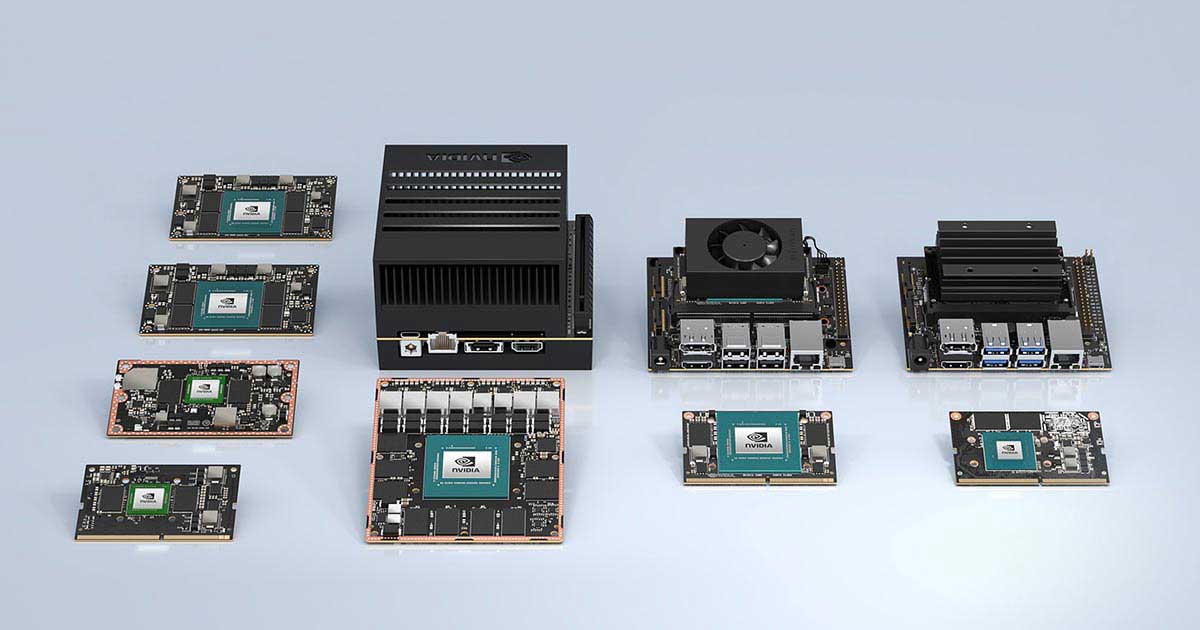

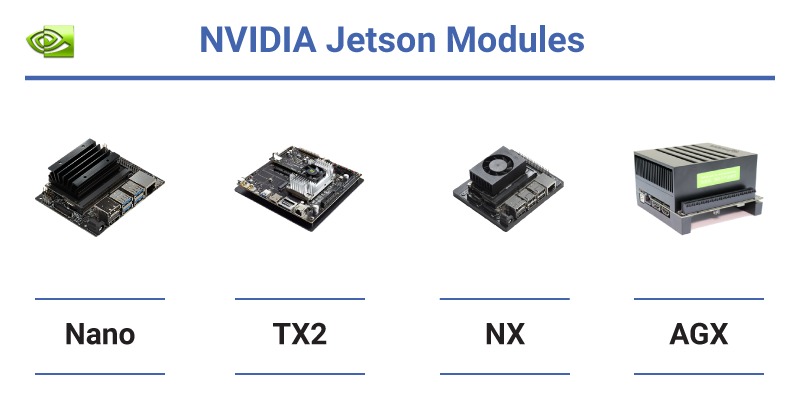

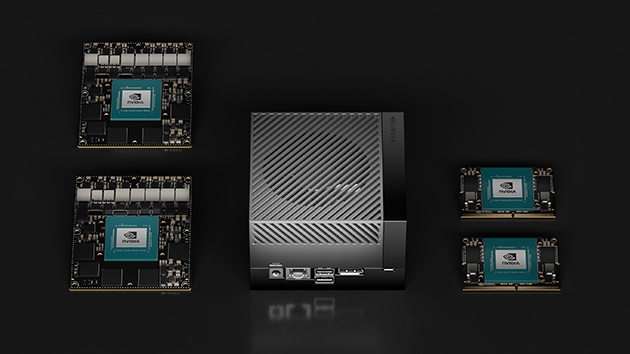
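CUDA runs a kernel as a grid of thread blocks. In CUDA C++, each thread typically computes its global index as `blockIdx.x * blockDim.x + threadIdx.x`, and the kernel is launched with `kernel<<<gridDim, blockDim>>>(...)`. The Python sketch below mimics that model sequentially for SAXPY (`y = a*x + y`). The helper names `saxpy_kernel` and `launch` are hypothetical. cuDNN, which provides tuned deep learning primitives on top of CUDA, is not modeled here.

```python
from typing import Callable, List


def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 a: float, x: List[float], y: List[float], n: int) -> None:
    """Body of one CUDA-style thread: y[i] = a * x[i] + y[i]."""
    i = block_idx * block_dim + thread_idx   # blockIdx.x * blockDim.x + threadIdx.x
    if i < n:                                # the last block may have spare threads
        y[i] = a * x[i] + y[i]


def launch(grid_dim: int, block_dim: int, kernel: Callable[..., None], *args) -> None:
    """Sequential stand-in for kernel<<<grid_dim, block_dim>>>(args...)."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 1000
block_dim = 256
grid_dim = (n + block_dim - 1) // block_dim   # ceil(1000 / 256) = 4 blocks
x = [1.0] * n
y = [2.0] * n
launch(grid_dim, block_dim, saxpy_kernel, 2.0, x, y, n)
print(grid_dim, y[0], y[-1])
```

The bounds check matters because 4 blocks of 256 threads give 1024 threads for only 1000 elements. On a GPU the blocks and threads run concurrently rather than in this loop order.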

NVIDIA Jetson

- Jetson AGX Orin module: up to 275 TOPS (64GB version; the 32GB module is rated at 200 TOPS)

- Jetson Benchmarks

- Getting the Best Performance on MLPerf Inference 2.0

Jetson Orin Nano

It has up to eight streaming multiprocessors (SMs), which together contain up to 1024 CUDA cores and up to 32 Tensor Cores for AI processing.
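The Orin Nano core counts follow from per-SM resources. This sketch assumes Orin's GPU uses the Ampere SM layout of 128 CUDA cores and 4 Tensor Cores per SM. The function name `gpu_resources` is illustrative.

```python
# Assumption: Orin's Ampere-based GPU has 128 CUDA cores and 4 Tensor Cores per SM.
CUDA_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4


def gpu_resources(sm_count: int) -> dict:
    """Total CUDA cores and Tensor Cores for a GPU with sm_count SMs."""
    return {
        "cuda_cores": sm_count * CUDA_CORES_PER_SM,
        "tensor_cores": sm_count * TENSOR_CORES_PER_SM,
    }


print(gpu_resources(8))   # the "up to eight SMs" configuration
```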

Kneron 耐能智慧

- KL530 AI SoC

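- Low-power, energy-efficient design based on an Arm Cortex-M4 CPU core.

- Compute performance of 1 TOPS at INT4; on the same hardware, INT4 processing is up to 70% more efficient than INT8.

- Supports a variety of AI models, including CNNs, Transformers, and RNN hybrids.

- Smart ISP that uses AI to optimize image quality, plus a powerful codec for efficient multimedia compression.

- Cold-start time under 500 ms; average power consumption under 500 mW.

- KL720 AI SoC (up to 0.9 TOPS/W)

- Low-power, energy-efficient design based on an Arm Cortex-M4 CPU core.

- Suitable for end devices such as high-end IP cameras, smart TVs, AI glasses and earphones, and AIoT network devices.

- Processes images up to 4K, Full HD audio and video, and 3D sensing, enabling accurate facial recognition and gesture control.

- Provides natural language processing for products such as translation devices and AI assistants.

- All of the above functions, along with other edge AI tasks such as thermal sensing, can be processed in real time.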
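The KL530's INT4 figure rests on a general idea: storing each weight in 4 bits instead of 8 halves memory and bandwidth. The sketch below shows generic symmetric per-tensor INT4 quantization and nibble packing. It illustrates the technique under those assumptions and is not Kneron's quantization scheme. It also does not reproduce the "up to 70%" efficiency figure, which is Kneron's own claim. All function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int4(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to signed 4-bit integers in [-8, 7]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(v / scale))) for v in values]
    return q, scale


def pack_int4(q: List[int]) -> bytes:
    """Pack two 4-bit two's-complement values per byte, low nibble first."""
    if len(q) % 2:
        q = q + [0]                      # pad to an even count
    out = bytearray()
    for lo, hi in zip(q[0::2], q[1::2]):
        out.append((lo & 0xF) | ((hi & 0xF) << 4))
    return bytes(out)


def unpack_int4(data: bytes, count: int) -> List[int]:
    """Inverse of pack_int4: sign-extend each nibble back to an int."""
    vals = []
    for byte in data:
        for nibble in (byte & 0xF, byte >> 4):
            vals.append(nibble - 16 if nibble >= 8 else nibble)
    return vals[:count]


weights = [0.875, -0.25, 0.125, -0.875]
q, scale = quantize_int4(weights)
packed = pack_int4(q)
print(q, scale, packed.hex(), [v * scale for v in unpack_int4(packed, len(q))])
```

Four weights fit in two bytes here, versus four bytes at INT8. Real deployments usually add per-channel scales and calibration to limit the accuracy loss from such coarse quantization.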

Realtek AmebaPro2

- MCU

- Part Number: RTL8735B

- 32-bit Arm v8M, up to 500MHz

- MEMORY

- 768KB ROM

- 512KB RAM

- Supports MCM embedded DDR2/DDR3L memory up to 128MB

- External Flash up to 64MB

- KEY FEATURES

- Integrated 802.11 a/b/g/n Wi-Fi, 2.4GHz/5GHz

- Bluetooth Low Energy (BLE) 4.2

- Integrated Intelligent Engine @ 0.4 TOPS

- Ethernet Interface

- USB Host/Device

- SD Host

- ISP

- Audio Codec

- H.264/H.265

- Secure Boot

- Crypto Engine

- OTHER FEATURES

- 2 SPI interfaces

- 1 I2C interface

- 8 PWM interfaces

- 3 UART interfaces

- 3 ADC interfaces

- 2 GDMA interfaces

- Max 23 GPIO

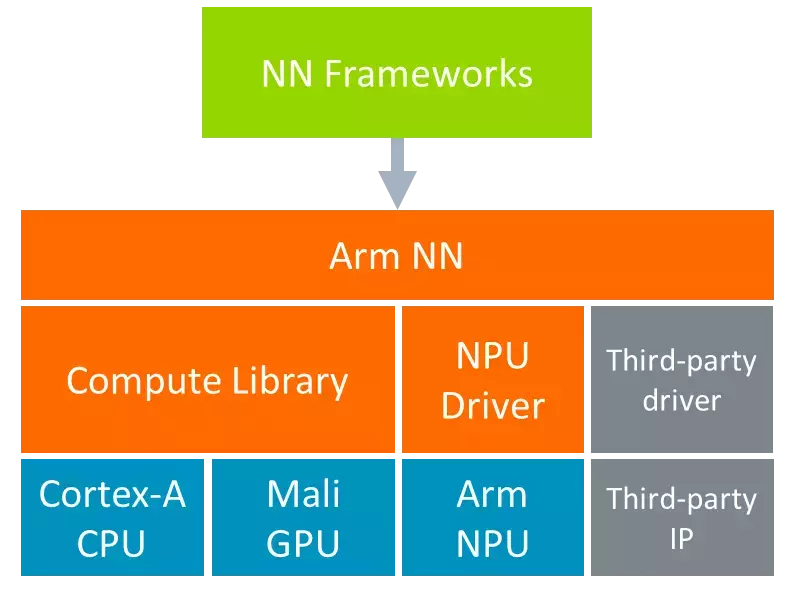

mlplatform.org

The machine learning platform is part of the Linaro Artificial Intelligence Initiative and is the home for Arm NN and Compute Library – open-source software libraries that optimise the execution of machine learning (ML) workloads on Arm-based processors.

| Project | Repository |
|---|---|
| Arm NN | [https://github.com/ARM-software/armnn](https://github.com/ARM-software/armnn) |
| Compute Library | [https://review.mlplatform.org/#/admin/projects/ml/ComputeLibrary](https://review.mlplatform.org/#/admin/projects/ml/ComputeLibrary) |
| Arm Android NN Driver | [https://github.com/ARM-software/android-nn-driver](https://github.com/ARM-software/android-nn-driver) |

ARM NN SDK

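The freely available Arm NN (neural network) SDK is a set of open-source Linux software tools that enables machine learning workloads on power-efficient devices. This inference engine acts as a bridge between existing neural network frameworks and power-efficient Arm Cortex-A CPUs, Arm Mali GPUs, and Ethos NPUs.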

ARM NN

Arm describes Arm NN as the most performant machine learning (ML) inference engine for Android and Linux; it accelerates ML on Arm Cortex-A CPUs and Arm Mali GPUs.

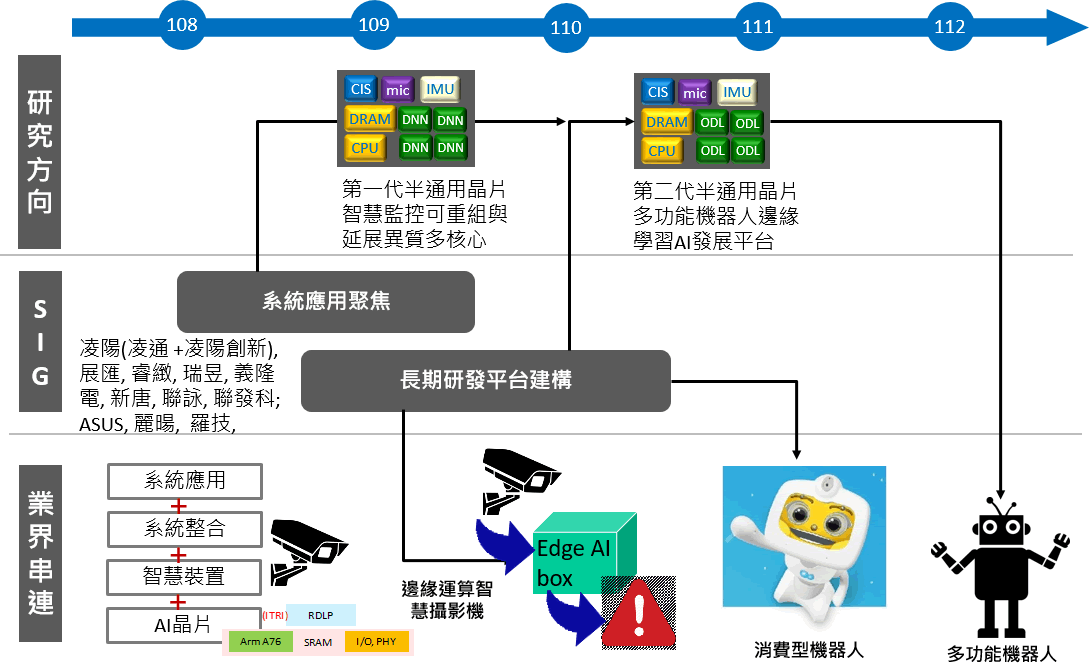

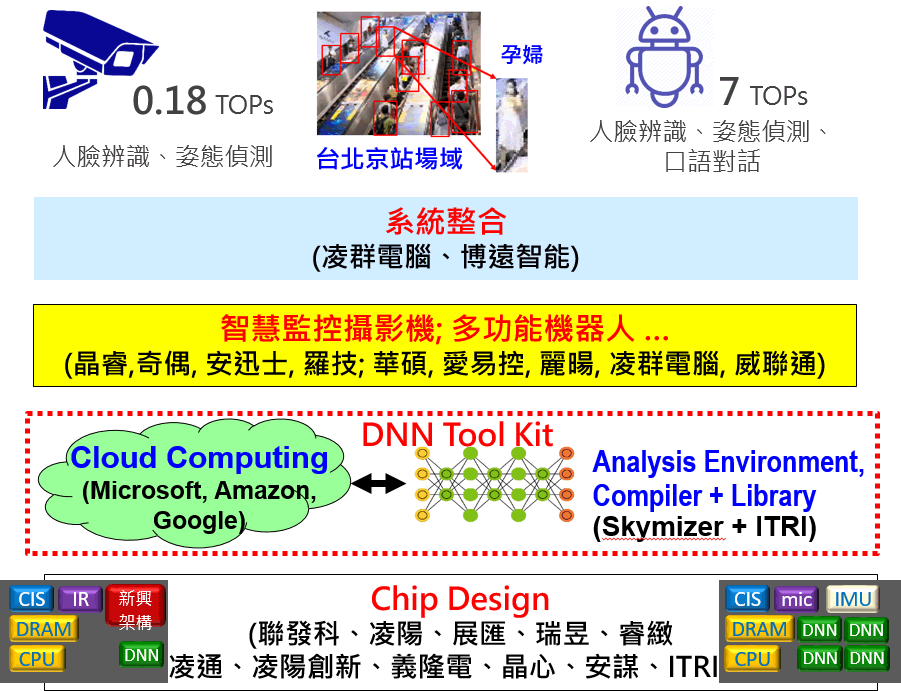

AI on Chip Taiwan Alliance (台灣人工智慧晶片聯盟)

ML Benchmark:

MLPerf

- MLPerf Inference v1.1 Results

- inference-datacenter v1.1 results

- inference-edge v1.1 results

- MLPerf Training v1.0 Results

- MLPerf Tiny Inference Benchmark
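MLPerf Inference results for latency-oriented scenarios are reported as tail-latency percentiles rather than averages. This sketch assumes the 90th-percentile latency used for the single-stream scenario in the v1.x rules, and uses the simple nearest-rank definition of a percentile. MLPerf's LoadGen may compute it slightly differently. The latency samples are hypothetical.

```python
import math
from typing import List


def percentile_nearest_rank(samples: List[float], pct: float) -> float:
    """Smallest sample such that at least pct% of all samples are <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)   # 1-based rank
    return ordered[rank - 1]


latencies_ms = [float(v) for v in range(1, 101)]  # hypothetical: 1 ms .. 100 ms
print(percentile_nearest_rank(latencies_ms, 90))
```

A tail percentile penalizes occasional slow queries that an average would hide, which matters for interactive edge and data center workloads.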

Framework

PyTorch

TensorFlow

TensorFlow Lite

TensorFlow Lite for Microcontrollers

TinyML

TensorFlow.js

MediaPipe

Open Platforms

This site was last updated December 22, 2022.