Convolutional Neural Networks

This page covers convolutional neural networks (CNNs): an overview, examples, and architectures.

Convolutional Neural Network (CNN)

Overview

Blog: Basic Introduction to Convolutional Neural Network in Deep Learning

Image Classification

Typical CNN

Convolutional Layers

Convolutional Operation

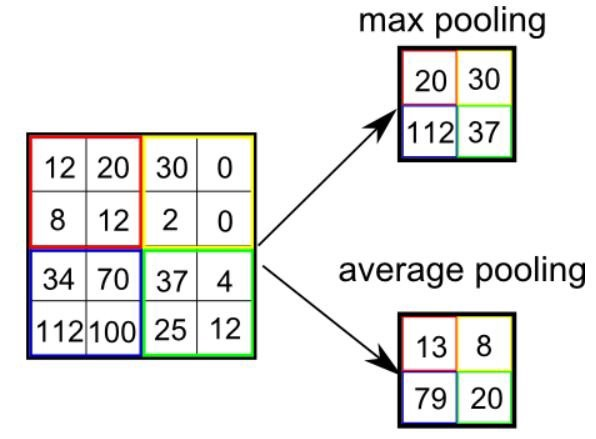
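As a minimal sketch of the convolutional operation (not taken from the linked material), the NumPy code below slides a kernel over a single-channel image with stride 1 and no padding. Like most deep learning frameworks, it computes a cross-correlation, meaning the kernel is not flipped. The function name `conv2d` and the toy image and kernel are illustrative assumptions.

```python
import numpy as np

def conv2d(image, kernel):
    """Stride-1, no-padding 2D cross-correlation of a single-channel image."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Element-wise product of the kernel and the patch under it, then sum
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 4x4 image whose pixel value is 4*row + col (hypothetical data)
image = np.arange(16, dtype=float).reshape(4, 4)
# 2x2 kernel that subtracts the lower-right neighbour from each pixel
kernel = np.array([[1.0, 0.0],
                   [0.0, -1.0]])

feature_map = conv2d(image, kernel)
print(feature_map.shape)  # a 4x4 input and a 2x2 kernel give a 3x3 feature map
print(feature_map)
```

A convolutional layer applies many such kernels across all input channels and adds a bias per kernel; the loop above shows only the core sliding-window arithmetic.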

Max-Pooling
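A minimal NumPy sketch of max-pooling, assuming non-overlapping square windows (stride equal to the window size, as with `pool_size=(2, 2)` in Keras). The helper name `max_pool2d` and the sample array are illustrative only.

```python
import numpy as np

def max_pool2d(x, size=2):
    """Non-overlapping max pooling over size x size windows of a 2D array."""
    h, w = x.shape
    # Drop trailing rows/columns that do not fill a complete window
    h, w = h - h % size, w - w % size
    # Axes after reshape: (window row, row inside window, window col, col inside window)
    windows = x[:h, :w].reshape(h // size, size, w // size, size)
    return windows.max(axis=(1, 3))

x = np.array([[1, 3, 2, 4],
              [5, 6, 1, 2],
              [7, 2, 9, 0],
              [1, 8, 3, 4]])

pooled = max_pool2d(x)
print(pooled)  # each output value is the maximum of one 2x2 window
```

Pooling halves each spatial dimension here, which is why a 28x28 feature map becomes 14x14 after one 2x2 pooling layer.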

Activation Functions

Sigmoid vs ReLU (Rectified Linear Unit)

Tanh or hyperbolic tangent

Leaky ReLU

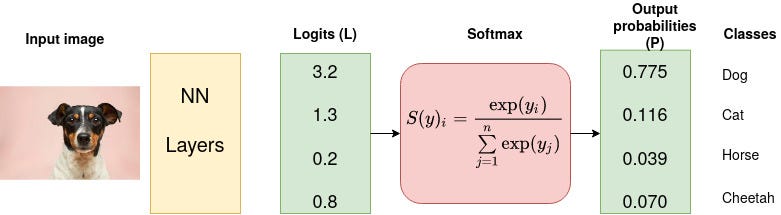
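The activation functions named above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than any library's implementation; the leaky slope `alpha=0.01` is an assumed, commonly used default.

```python
import numpy as np

def sigmoid(x):
    """Squashes inputs into (0, 1); saturates for large |x|."""
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    """Passes positive inputs through and zeroes out negative ones."""
    return np.maximum(0.0, x)

def leaky_relu(x, alpha=0.01):
    """Like ReLU, but keeps a small slope alpha for negative inputs (alpha is an assumption)."""
    return np.where(x > 0, x, alpha * x)

x = np.array([-2.0, 0.0, 2.0])
print("sigmoid   :", sigmoid(x))
print("tanh      :", np.tanh(x))   # hyperbolic tangent, output in (-1, 1)
print("relu      :", relu(x))
print("leaky_relu:", leaky_relu(x))
```

Sigmoid and tanh flatten out for large inputs, so their gradients shrink there; ReLU and Leaky ReLU keep a constant slope for positive inputs.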

Softmax Activation function

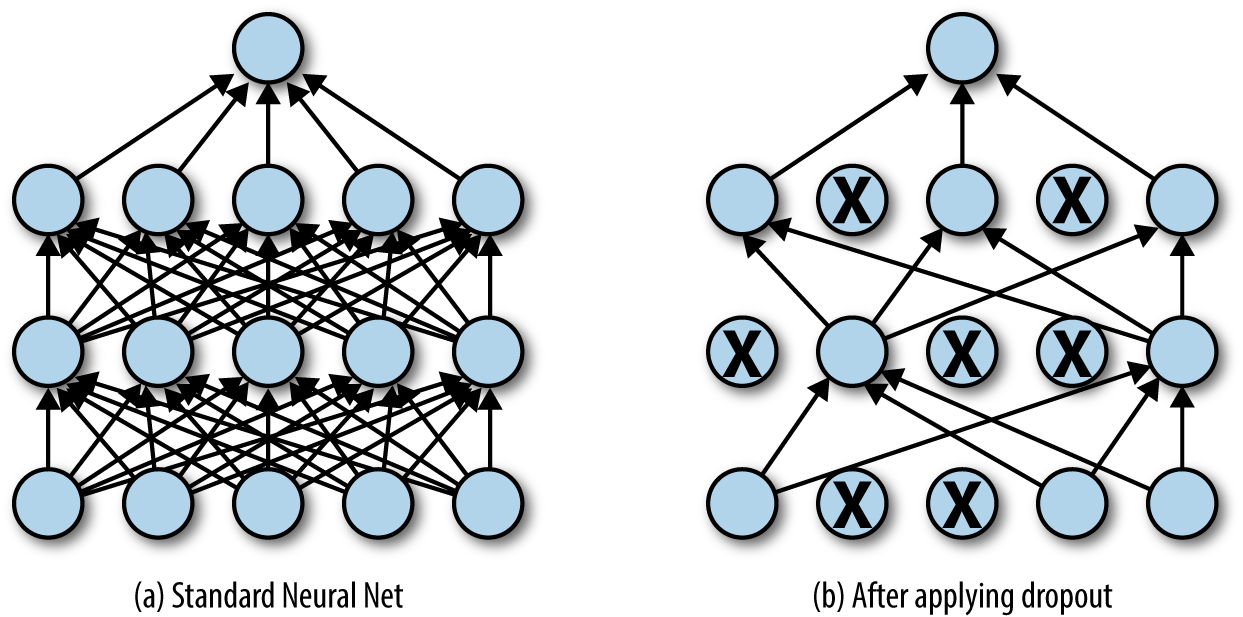
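A minimal sketch of softmax in NumPy. Subtracting the maximum logit before exponentiating is a standard numerical-stability step and does not change the result; the function name and sample logits are illustrative.

```python
import numpy as np

def softmax(logits):
    """Turn a vector (or batch) of logits into probabilities that sum to 1."""
    # Shift by the max so np.exp never overflows; the ratio is unchanged
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

logits = np.array([2.0, 1.0, 0.1])
probs = softmax(logits)
print(probs, probs.sum())
```

In a classifier such as the MNIST model later on this page, the final `Dense` layer with `activation='softmax'` produces one such probability per class.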

Dropout

Dropout: A Simple Way to Prevent Neural Networks from Overfitting

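During training, dropout randomly deactivates some hidden-layer nodes. The deactivated nodes can be treated as temporarily not part of the network structure, but their weights are retained (they are simply not updated for that step).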
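The idea can be sketched with "inverted" dropout in NumPy: a random mask zeroes a fraction of activations during training and the survivors are scaled up so the expected activation is unchanged. The scaling convention, the function name `dropout`, and the fixed seed are assumptions made for illustration.

```python
import numpy as np

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero a fraction `rate` of units, rescale the rest."""
    if not training or rate == 0.0:
        return activations          # at inference time nothing is dropped
    keep_prob = 1.0 - rate
    # True where a unit is kept for this training step
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

rng = np.random.default_rng(0)       # fixed seed only to make the demo repeatable
h = np.ones((1000, 100))             # hypothetical hidden-layer activations

dropped = dropout(h, rate=0.5, rng=rng)
print("fraction zeroed:", np.mean(dropped == 0.0))
print("mean activation:", dropped.mean())
```

Because a different mask is drawn on every call, each training step effectively updates a different thinned sub-network while all weights are kept.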

Gradient Descent Optimization Algorithms

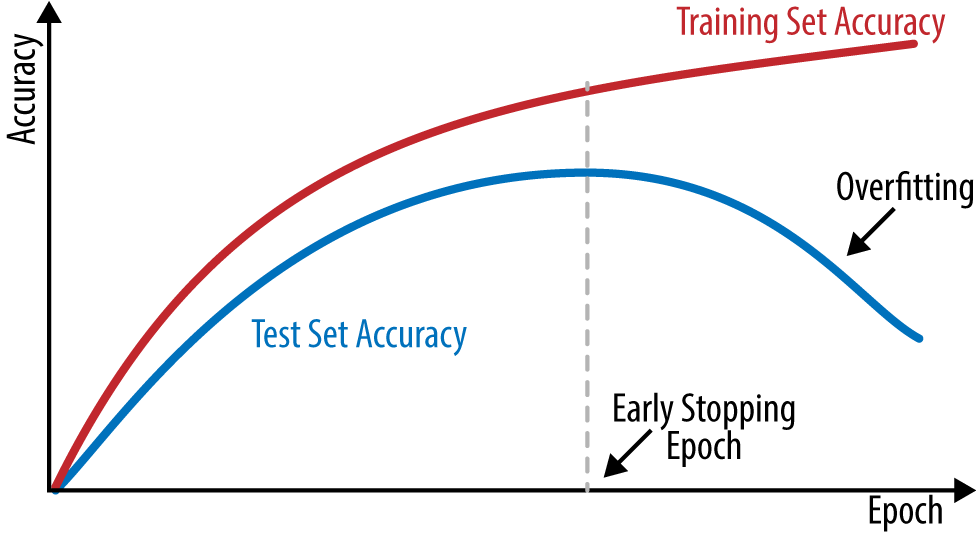
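To make the update rules concrete, here is a hedged sketch of three common optimizers (plain SGD, SGD with momentum, and Adam) applied to a toy quadratic loss. The learning rate, the toy loss, and all function names are illustrative assumptions; the Adam constants are the commonly cited defaults.

```python
import numpy as np

def sgd_step(w, grad, lr=0.1):
    """Plain gradient descent: step against the gradient."""
    return w - lr * grad

def momentum_step(w, grad, velocity, lr=0.1, beta=0.9):
    """SGD with momentum: keep a decaying running sum of past steps."""
    velocity = beta * velocity - lr * grad
    return w + velocity, velocity

def adam_step(w, grad, m, v, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: per-parameter step sizes from bias-corrected moment estimates."""
    m = beta1 * m + (1 - beta1) * grad           # first moment (mean of gradients)
    v = beta2 * v + (1 - beta2) * grad ** 2      # second moment (mean of squared gradients)
    m_hat = m / (1 - beta1 ** t)                 # bias correction, t starts at 1
    v_hat = v / (1 - beta2 ** t)
    return w - lr * m_hat / (np.sqrt(v_hat) + eps), m, v

def grad_fn(w):
    # Gradient of the toy loss f(w) = 0.5 * ||w||^2, whose minimum is at w = 0
    return w

w0 = np.array([3.0, -2.0])

w_sgd = w0.copy()
for _ in range(200):
    w_sgd = sgd_step(w_sgd, grad_fn(w_sgd))

w_mom, vel = w0.copy(), np.zeros_like(w0)
for _ in range(200):
    w_mom, vel = momentum_step(w_mom, grad_fn(w_mom), vel)

w_adam, m, v = w0.copy(), np.zeros_like(w0), np.zeros_like(w0)
for t in range(1, 201):
    w_adam, m, v = adam_step(w_adam, grad_fn(w_adam), m, v, t)

for name, w in [("sgd", w_sgd), ("momentum", w_mom), ("adam", w_adam)]:
    print(name, np.linalg.norm(w))
```

In Keras these correspond to the `optimizer` argument of `model.compile`, for example `optimizer='adam'` in the MNIST example on this page.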

Early Stopping
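Early stopping halts training once the validation loss stops improving. The framework-independent sketch below shows one common form of the rule with a `patience` counter; the function name, the `min_delta` parameter, and the loss values are hypothetical.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses.

    Training stops at the first epoch where the loss has failed to improve
    by more than min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0   # new best: reset the counter
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch                   # never triggered

# Hypothetical validation losses: improving until epoch 3, then flat or worse
val_losses = [0.90, 0.60, 0.45, 0.40, 0.42, 0.41, 0.43, 0.44]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=3)
print("stop at epoch", stop_epoch, "best epoch", best_epoch)
```

In Keras the same behaviour is available through the `tf.keras.callbacks.EarlyStopping` callback (for example with `monitor='val_loss'` and `patience=3`), passed to `model.fit` via `callbacks=[...]`.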

Visualize Filters & Feature Maps

- Guide to Visualize Filters and Feature Maps in CNN

- VGG16 - Layers Visualization Tutorial

- How to Visualize Feature Maps in Convolutional Neural Networks using PyTorch

Examples

MNIST (handwritten digits)

Training set: 60,000 grayscale digit images, 28x28 pixels each

Test set: 10,000 grayscale digit images, 28x28 pixels each

Kaggle: https://www.kaggle.com/rkuo2000/mnist-cnn

mnist_cnn.py

from tensorflow.keras import models, layers, datasets

import matplotlib.pyplot as plt

# Load Dataset

mnist = datasets.mnist # MNIST datasets

(x_train_data, y_train),(x_test_data,y_test) = mnist.load_data()

# data normalization

x_train, x_test = x_train_data / 255.0, x_test_data / 255.0

print('x_train shape:', x_train.shape)

print('train samples:', x_train.shape[0])

print('test samples:', x_test.shape[0])

# reshape for input

x_train = x_train.reshape(-1,28,28,1)

x_test = x_test.reshape(-1,28,28,1)

# Build Model

num_classes = 10 # 0~9

model = models.Sequential()

model.add(layers.Conv2D(32, kernel_size=(5, 5),activation='relu', padding='same',input_shape=(28,28,1)))

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

model.add(layers.Conv2D(64, (5, 5), activation='relu', padding='same'))

model.add(layers.MaxPooling2D(pool_size=(2, 2)))

model.add(layers.Dropout(0.25))

model.add(layers.Flatten())

model.add(layers.Dense(128, activation='relu'))

model.add(layers.Dropout(0.5))

model.add(layers.Dense(num_classes, activation='softmax'))

model.summary()

model.compile(loss='sparse_categorical_crossentropy', optimizer='adam',metrics=['accuracy'])

# Train Model

epochs = 12

batch_size = 128

history = model.fit(x_train, y_train, batch_size=batch_size, epochs=epochs, verbose=1, validation_data=(x_test, y_test))

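# Save Model (create the output directory first so that saving does not fail)

import os

os.makedirs('models', exist_ok=True)

models.save_model(model, 'models/mnist_cnn.h5')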

# Evaluate Model

score = model.evaluate(x_train, y_train, verbose=0)

print('\nTrain Accuracy:', score[1])

score = model.evaluate(x_test, y_test, verbose=0)

print('\nTest Accuracy:', score[1])

print()

# Show Training History

keys=history.history.keys()

print(keys)

def show_train_history(hisData, train, test):

    # Plot one training metric and its validation counterpart per epoch

    plt.plot(hisData.history[train])

    plt.plot(hisData.history[test])

    plt.title('Training History')

    plt.ylabel(train)

    plt.xlabel('Epoch')

    plt.legend(['train', 'test'], loc='upper left')

    plt.show()

show_train_history(history, 'loss', 'val_loss')

show_train_history(history, 'accuracy', 'val_accuracy')
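As a cross-check on what `model.summary()` should report for the network above, the following sketch recomputes the feature-map sizes and parameter counts by hand. It assumes `padding='same'` convolutions (spatial size unchanged) and 2x2 pooling (spatial size halved), as in the code; the helper names are illustrative.

```python
def conv2d_params(kernel, in_ch, out_ch):
    """Weights (kernel*kernel*in_ch per filter) plus one bias per filter."""
    return kernel * kernel * in_ch * out_ch + out_ch

def dense_params(in_units, out_units):
    """One weight per input-output pair plus one bias per output."""
    return in_units * out_units + out_units

size = 28                                   # MNIST images are 28x28x1
layer_params = {}

layer_params["conv1"] = conv2d_params(5, 1, 32)     # 'same' padding keeps 28x28
size //= 2                                          # 2x2 max-pooling -> 14x14
layer_params["conv2"] = conv2d_params(5, 32, 64)    # 'same' padding keeps 14x14
size //= 2                                          # 2x2 max-pooling -> 7x7
flat_units = size * size * 64                       # Flatten: 7 * 7 * 64
layer_params["dense1"] = dense_params(flat_units, 128)
layer_params["dense2"] = dense_params(128, 10)      # 10 output classes

total_params = sum(layer_params.values())
for name, count in layer_params.items():
    print(f"{name:7s} {count:>8d}")
print("flatten units:", flat_units)
print("total params :", total_params)
```

Dropout, pooling, and flatten layers have no trainable parameters, so most of the weights sit in the first `Dense` layer.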

HiraganaMNIST

Dataset: Kuzushiji-MNIST

Kaggle: https://www.kaggle.com/rkuo2000/hiraganamnist

Sign-Language MNIST

Dataset: Sign-Language MNIST

Training set: 21,964 color images of hand-sign letters, 28x28 pixels each

Test set: 5,491 color images of hand-sign letters, 28x28 pixels each

Kaggle: https://www.kaggle.com/code/rkuo2000/sign-language-mnist

FashionMNIST

Dataset: FashionMNIST

28x28 grayscale images

10 classes: [T-shirt/top, Trouser, Pullover, Dress, Coat, Sandal, Shirt, Sneaker, Bag, Ankle boot]

60000 train data

10000 test data

Kaggle: https://www.kaggle.com/rkuo2000/fashionmnist-cnn

AirDigit (digits handwritten in the air)

Dataset:

- https://www.kaggle.com/datasets/rkuo2000/airdigit

- https://www.kaggle.com/datasets/rkuo2000/airdigit-mpu6050

Kaggle: https://www.kaggle.com/rkuo2000/airdigit-cnn

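ECG Diagnosis: Theoretical Foundations and Systems

ECG classification:

- Normal (normal heartbeat)

- Atrial Premature (premature atrial contraction)

- A premature atrial contraction occurs when the atria beat early, before receiving the normal signal. Because little blood is pumped, the beat feels empty. The longer diastole that follows lets more blood return to the heart, so the next beat is stronger and is felt as a palpitation. A heart that stays in this abnormal, energy-wasting state for a long time is prone to developing heart failure.

- Premature ventricular contraction

- A premature ventricular contraction is a type of arrhythmia in which the ventricles beat early because of unstable electrical potential, and the patient feels as if the heart has skipped a beat. Because these beats are of poor quality, the heart pumps too little blood while still consuming energy, which over time can lead to serious conditions such as heart failure. Symptoms include palpitations, dizziness, cold sweats, and chest tightness.

- Fusion of ventricular and normal (ventricular fusion beat)

- A ventricular fusion beat is a ventricular complex formed when impulses from two pacemaker sites each activate part of the ventricles at the same time. It is classed as an interference phenomenon among arrhythmias.

- Fusion of paced and normal (pacemaker fusion beat)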

ECG Classification

Paper: ECG Heartbeat Classification: A Deep Transferable Representation

Kaggle: https://www.kaggle.com/rkuo2000/ecg-classification

PPG2ABP (estimating arterial blood pressure from PPG)

Paper: PPG2ABP: Translating Photoplethysmogram (PPG) Signals to Arterial Blood Pressure (ABP) Waveforms using Fully Convolutional Neural Networks

Code: nibtehaz/PPG2ABP

Kaggle: https://www.kaggle.com/code/rkuo2000/ppg2ab

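Sound Digit CNN (spoken digit classification)

Dataset: Taiwanese digits 0~9

- Use a phone recording app (e.g., Voice Recorder) to save each spoken digit as a .wav file

- Recording length = 1 second (the longer the recording, the longer both training and recognition take)

- Training set: record each digit 20 times: 0_000.wav … 0_019.wav, 1_000.wav … 1_019.wav, …, 9_000.wav … 9_019.wav

- Test set: record each digit 2 times: 0_000.wav, 0_001.wav, 1_000.wav, 1_001.wav … 9_000.wav, 9_001.wav

- Locate the recordings folder on the phone and name it SoundDigit, with two subdirectories, train (training set) and test (test set), then compress it into SoundDigit.zip

- Upload it to your Kaggle.com account to create a new Dataset (for example, https://kaggle.com/rkuo2000/SoundDigitTW contains Taiwanese digits 0~9)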

Kaggle: https://www.kaggle.com/code/rkuo2000/sounddigit-cnn

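- Use librosa to plot the waveform

```
import librosa
import librosa.display
import matplotlib.pyplot as plt

# Plot the waveform of the first training recording of each digit
for i in range(10):
    y, sr = librosa.load('Dataset/Train/' + str(i) + '_000.wav')
    plt.figure()
    plt.subplot(3, 1, 1)
    # waveshow replaces waveplot, which was removed in librosa 0.10
    librosa.display.waveshow(y, sr=sr)
    plt.title('Waveform')
```

- Extract the MFCC spectrogram

```
import numpy as np

# TRAIN_FILES, extract_feature and display_mfcc are assumed to be
# defined earlier in the notebook
x_train = list()
y_train = list()
(row, col) = (40, 62)

for FILE in TRAIN_FILES:
    filename = FILE.replace('.3gp', '.wav')
    mfcc = extract_feature('Dataset/Train/' + filename)
    # print(mfcc.shape)
    if (mfcc.shape[1] != col):
        # force every MFCC matrix to the same (row, col) shape
        mfcc = np.resize(mfcc, (row, col))
    x_train.append(mfcc)
    y_train.append(FILE[0])  # first character of the filename is the class name
    if FILE[2:5] == "000":
        # show the first recording of each digit
        print(mfcc.shape)
        display_mfcc(mfcc)
```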
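One detail of the MFCC loop above is worth checking: `np.resize` flattens the array and refills the new shape with repeated data, so when the number of frames differs from `col` the rows no longer line up with the original coefficients. Whether that matters for this notebook is not stated in the source. As a hedged alternative, the sketch below zero-pads or truncates along the time axis instead; `fix_frames` is a hypothetical helper, not part of the notebook.

```python
import numpy as np

def fix_frames(mfcc, n_frames):
    """Zero-pad or truncate along the time axis (axis 1) to exactly n_frames columns."""
    current = mfcc.shape[1]
    if current < n_frames:
        # Pad only on the right of axis 1; rows (coefficients) are left untouched
        return np.pad(mfcc, ((0, 0), (0, n_frames - current)), mode="constant")
    return mfcc[:, :n_frames]

# Tiny stand-in for an MFCC matrix: 2 coefficients x 3 frames
short = np.arange(6, dtype=float).reshape(2, 3)

padded = fix_frames(short, 5)        # rows stay aligned, new frames are zeros
resized = np.resize(short, (2, 5))   # data is flattened and repeated across rows

print("padded:\n", padded)
print("np.resize:\n", resized)
```

With padding, each row still holds one coefficient over time, which is the layout a 2D convolution over the MFCC "image" would normally expect.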

Urban Sound Classification

Dataset: https://www.kaggle.com/chrisfilo/urbansound8k

10 classes: air conditioner, car horn, children playing, dog bark, drilling, engine idling, gun shot, jackhammer, siren, street music

Kaggle: https://www.kaggle.com/rkuo2000/urban-sound-classification

Speech Commands (speech command recognition)

Dataset: Google Speech Commands

Speech Commands: “yes”, “no”, “up”, “down”, “left”, “right”, “on”, “off”, “stop”, “go”

Code: tensorflow/examples/speech_commands

Kaggle: https://www.kaggle.com/code/rkuo2000/qcnn-asr

CNN architectures

-

LeNet-5 (1998) Gradient-Based Learning Applied to Document Recognition

-

AlexNet (2012) ImageNet Classification with Deep Convolutional Neural Networks

-

VGG-16 (2014)

-

Inception-v1 (2014)

-

Inception-v3 (2015)

-

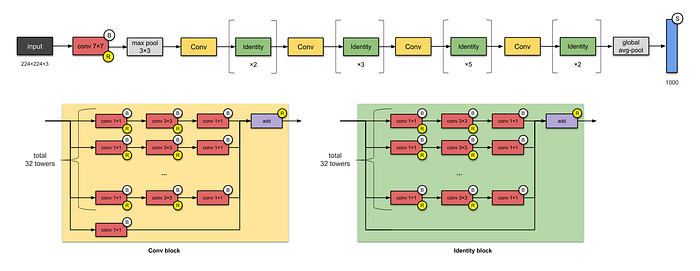

ResNet-50 (2015)

-

Xception (2016)

-

Inception-v4 (2016)

-

Inception-ResNets (2016)

-

DenseNet (2016)

-

ResNeXt-50 (2017)

-

EfficientNet (2019)

CNN model comparison

HarDNet (2019)

Paper: HarDNet: A Low Memory Traffic Network

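Blog: Targeting high-resolution streaming analytics, NTHU open-sources the CNN architecture HarDNet, with image classification 30% faster than the common ResNet-50 architecture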

Code: PingoLH/Pytorch-HarDNet

GhostNet (2019)

Paper: GhostNet: More Features from Cheap Operations

Code: ghostnet_pytorch

Blog: A Dive Into GhostNet with PyTorch and TensorFlow

CSPNet (2019)

Paper: CSPNet: A New Backbone that can Enhance Learning Capability of CNN

Blog: Review — CSPNet: A New Backbone That Can Enhance Learning Capability of CNN

Code: https://github.com/WongKinYiu/CrossStagePartialNetworks

Cross Stage Partial DenseNet (CSPDenseNet)

Vision Transformer (2020)

Paper: An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

Code: https://github.com/google-research/vision_transformer

RepVGG (2021)

Paper: Making VGG-style ConvNets Great Again

Code: https://github.com/DingXiaoH/RepVGG

NFNets (2021)

Paper: High-Performance Large-Scale Image Recognition Without Normalization

Code:

- https://github.com/deepmind/deepmind-research/tree/master/nfnets

- https://github.com/vballoli/nfnets-pytorch

YouTube: https://www.youtube.com/embed/rNkHjZtH0RQ

Blog: NFNet (Normalizer-Free ResNets) paper reading

Pytorch Image Models (https://rwightman.github.io/pytorch-image-models/models/)

Big Transfer ResNetV2 (BiT) resnetv2.py

- Paper: Big Transfer (BiT): General Visual Representation Learning

- Code: https://github.com/google-research/big_transfer

Cross-Stage Partial Networks cspnet.py

- Paper: CSPNet: A New Backbone that can Enhance Learning Capability of CNN

- Code: https://github.com/WongKinYiu/CrossStagePartialNetworks

DenseNet densenet.py

- Paper: Densely Connected Convolutional Networks

- Code: https://github.com/pytorch/vision/tree/master/torchvision/models

DLA dla.py

- Paper: Deep Layer Aggregation

- Code: https://github.com/ucbdrive/dla

Dual-Path Networks dpn.py

GPU-Efficient Networks byobnet.py

- Paper: Neural Architecture Design for GPU-Efficient Networks

- Code: https://github.com/idstcv/GPU-Efficient-Networks

HRNet hrnet.py

- Paper: Deep High-Resolution Representation Learning for Visual Recognition

- Code: https://github.com/HRNet/HRNet-Image-Classification

Inception-V3 inception_v3.py

- Paper: Rethinking the Inception Architecture for Computer Vision

- Code: https://github.com/pytorch/vision/tree/master/torchvision/models

Inception-V4 inception_v4.py

- Paper: Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning

- Code: https://github.com/Cadene/pretrained-models.pytorch

Inception-ResNet-V2 inception_resnet_v2.py

- Paper: Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning

- Code: https://github.com/Cadene/pretrained-models.pytorch

NASNet-A nasnet.py

- Paper: Learning Transferable Architectures for Scalable Image Recognition

- Code: https://github.com/Cadene/pretrained-models.pytorch

PNasNet-5 pnasnet.py

- Paper: Progressive Neural Architecture Search

- Code: https://github.com/Cadene/pretrained-models.pytorch

EfficientNet efficientnet.py

MobileNet-V3 mobilenetv3.py

- Paper: Searching for MobileNetV3

- Code: https://github.com/tensorflow/models/tree/master/research/slim/nets/mobilenet

RegNet regnet.py

- Paper: Designing Network Design Spaces

- Code: https://github.com/facebookresearch/pycls/blob/master/pycls/models/regnet.py

RepVGG byobnet.py

ResNet, ResNeXt resnet.py

ResNet (V1B)

- Paper: Deep Residual Learning for Image Recognition

- Code: https://github.com/pytorch/vision/tree/master/torchvision/models

ResNeXt

- Paper: Aggregated Residual Transformations for Deep Neural Networks

- Code: https://github.com/pytorch/vision/tree/master/torchvision/models

ECAResNet (ECA-Net)

Res2Net res2net.py

ResNeSt resnest.py

ReXNet rexnet.py

- Paper: ReXNet: Diminishing Representational Bottleneck on CNN

- Code: https://github.com/clovaai/rexnet

Selective-Kernel Networks sknet.py

- Paper: Selective-Kernel Networks

- Code: https://github.com/implus/SKNet, https://github.com/clovaai/assembled-cnn

SelecSLS selecsls.py

- Paper: XNect: Real-time Multi-Person 3D Motion Capture with a Single RGB Camera

- Code: https://github.com/mehtadushy/SelecSLS-Pytorch

Squeeze-and-Excitation Networks senet.py

TResNet tresnet.py

VGG vgg.py

- Paper: Very Deep Convolutional Networks For Large-Scale Image Recognition

- Code: https://github.com/pytorch/vision/blob/master/torchvision/models/vgg.py

Vision Transformer vision_transformer.py

- Paper: An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

- Code: https://github.com/google-research/vision_transformer

VovNet V2 and V1 vovnet.py

- Paper: CenterMask: Real-Time Anchor-Free Instance Segmentation

- Code: https://github.com/youngwanLEE/vovnet-detectron2

Xception xception.py

- Paper: Xception: Deep Learning with Depthwise Separable Convolutions (https://arxiv.org/abs/1610.02357)

- Code: https://github.com/Cadene/pretrained-models.pytorch

Xception (Modified Aligned, Gluon) gluon_xception.py

- Paper: Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation

- Code: https://github.com/dmlc/gluon-cv/tree/master/gluoncv/model_zoo, https://github.com/jfzhang95/pytorch-deeplab-xception/

Xception (Modified Aligned, TF) aligned_xception.py

- Paper: Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation

- Code: https://github.com/tensorflow/models/tree/master/research/deeplab

This site was last updated December 22, 2022.