Object Detection

Introduction to Image Datasets, Object Detection, Object Tracking, and Their Applications.

Image Datasets

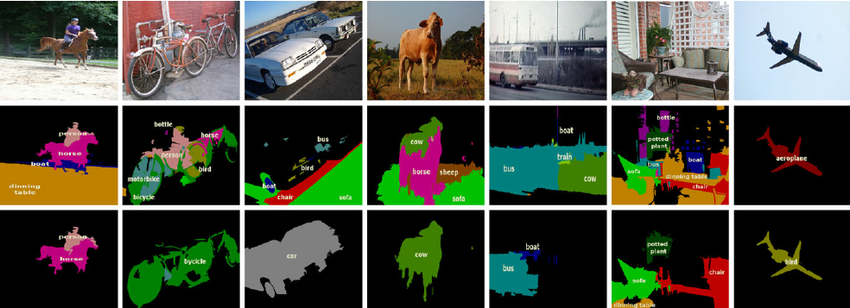

PASCAL VOC

VOC2007: 20 classes. The train/val/test data has 9,963 images containing 24,640 annotated objects.

VOC2012: 20 classes. The train/val data has 11,530 images containing 27,450 ROI-annotated objects and 6,929 segmentations.

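```python
from torchbench.semantic_segmentation import PASCALVOC
from torchvision.models.segmentation import fcn_resnet101

# 21 outputs = 20 PASCAL VOC object classes + 1 background class
model = fcn_resnet101(num_classes=21, pretrained=True)

# Benchmark the model on PASCAL VOC; the paper_* fields link the result to its publication
PASCALVOC.benchmark(model=model,
                    paper_model_name='FCN ResNet-101',
                    paper_arxiv_id='1605.06211')
```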

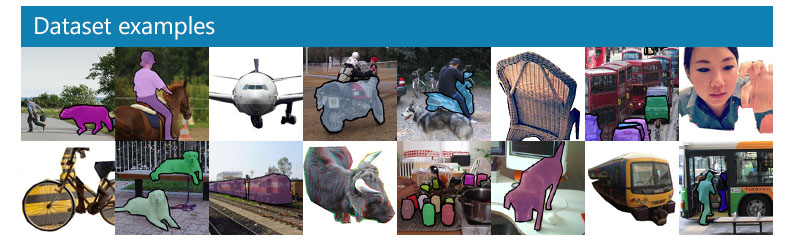

COCO Dataset

330K images (>200K labeled), 1.5 million object instances, 80 object categories

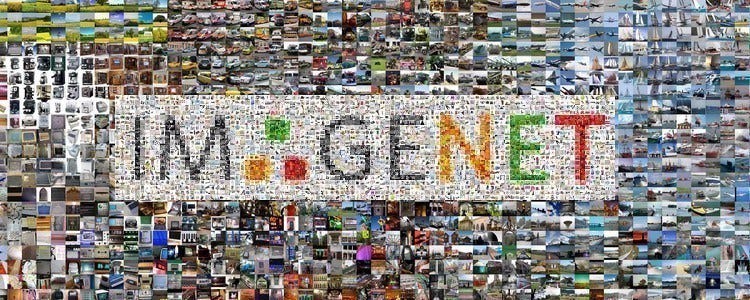

ImageNet

1000 object classes, with 1,281,167 training images, 50,000 validation images, and 100,000 test images.

Open Images Dataset

Open Images Dataset V6+:

These annotation files cover the 600 boxable object classes, and span the 1,743,042 training images where we annotated bounding boxes, object segmentations, visual relationships, and localized narratives; as well as the full validation (41,620 images) and test (125,436 images) sets.

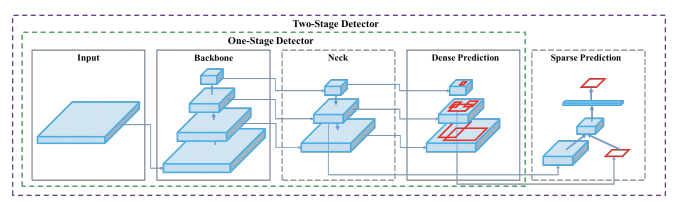

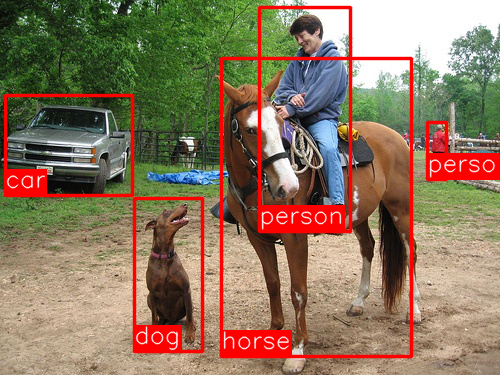

Object Detection

Object Detection Milestones

Object Detection Landscape

Blog: The Object Detection Landscape: Accuracy vs Runtime

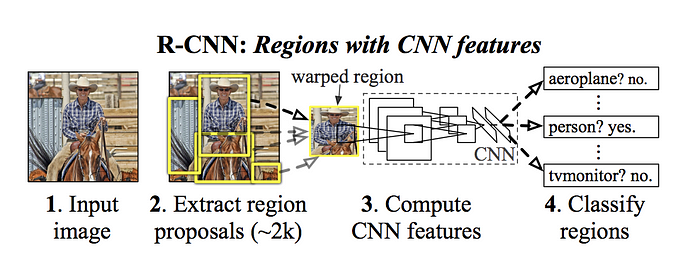

R-CNN, Fast R-CNN, Faster R-CNN

Blog: 目標檢測 (Object Detection)

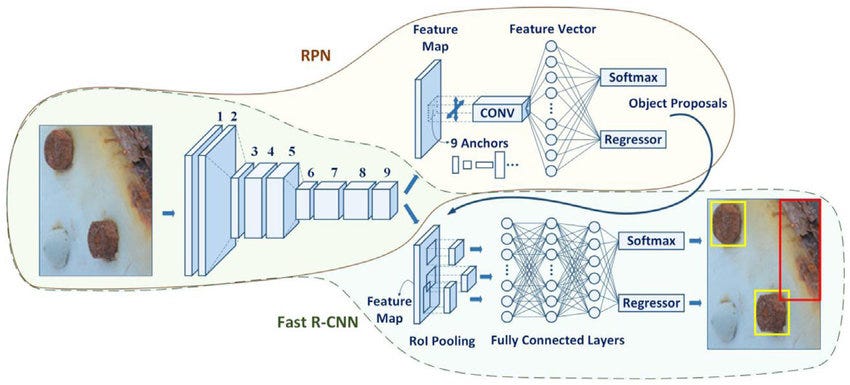

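- R-CNN first uses Selective Search to extract region proposals (candidate boxes), then uses a deep network (conv layers) to extract features, and finally classifies each candidate box with SVMs and refines its bounding box with regression. Each of these steps is a separate, independent stage.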

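- Fast R-CNN builds on R-CNN by introducing a multi-task loss that learns classification and bounding-box regression jointly as a single task. In addition, RoI projection maps the RoIs extracted by Selective Search (i.e., the region proposals) onto the corresponding feature map of the original image, which reduces computation and storage and greatly speeds up both training and testing.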

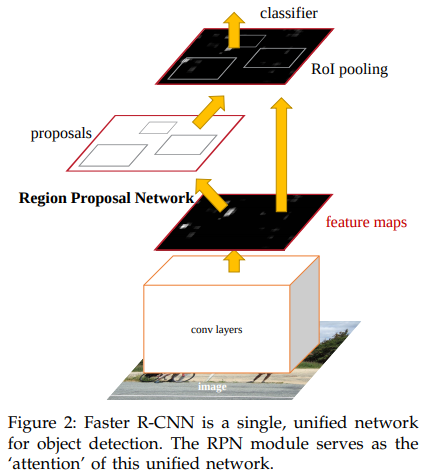

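- Faster R-CNN builds on Fast R-CNN by introducing the RPN (Region Proposal Network) to generate region proposals. Because the network is shared, proposal extraction and object detection are trained together as one whole; this replaces the Selective Search step of Fast R-CNN and speeds up testing.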
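The RoI projection step of Fast R-CNN can be sketched in plain Python. This is a minimal illustration, not the Fast R-CNN implementation: it assumes a feature map with a total stride of 16 (as for VGG-16 conv5), rounds the projected box outward (real implementations differ in how they round), and max-pools each RoI into a 2×2 grid rather than the 7×7 grid used with VGG-16. `project_roi` and `roi_max_pool` are hypothetical names.

```python
import math


def project_roi(box, stride=16):
    """Map an (x1, y1, x2, y2) box in image pixels to inclusive feature-map
    cell indices (fx1, fy1, fx2, fy2). stride=16 is an assumption."""
    x1, y1, x2, y2 = box
    return (int(x1 // stride), int(y1 // stride),
            math.ceil(x2 / stride) - 1, math.ceil(y2 / stride) - 1)


def roi_max_pool(feature_map, box, out_size=2, stride=16):
    """Simplified RoI max pooling: split the projected RoI into an
    out_size x out_size grid of bins and keep the maximum of each bin."""
    fx1, fy1, fx2, fy2 = project_roi(box, stride)
    h, w = fy2 - fy1 + 1, fx2 - fx1 + 1
    pooled = []
    for i in range(out_size):
        # Row range [r0, r1) of this bin; every bin covers at least one cell.
        r0 = fy1 + (i * h) // out_size
        r1 = max(fy1 + ((i + 1) * h) // out_size, r0 + 1)
        row = []
        for j in range(out_size):
            c0 = fx1 + (j * w) // out_size
            c1 = max(fx1 + ((j + 1) * w) // out_size, c0 + 1)
            row.append(max(feature_map[r][c]
                           for r in range(r0, r1) for c in range(c0, c1)))
        pooled.append(row)
    return pooled


# Toy 4 x 4 feature map, as produced by a 64 x 64 image at stride 16.
fm = [[r * 4 + c for c in range(4)] for r in range(4)]
# Every proposal reuses the same feature map: no per-proposal CNN pass.
for proposal in [(0, 0, 64, 64), (16, 16, 48, 48)]:
    print(proposal, roi_max_pool(fm, proposal))
```

The saving over R-CNN comes from computing the convolutional features once per image; each proposal then costs only a cheap projection and pooling step.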

R-CNN

Paper: arxiv.org/abs/1311.2524

Fast R-CNN

Paper: arxiv.org/abs/1504.08083

Github: faster-rcnn

Faster R-CNN

Paper: arxiv.org/abs/1506.01497

Github: faster_rcnn, py-faster-rcnn

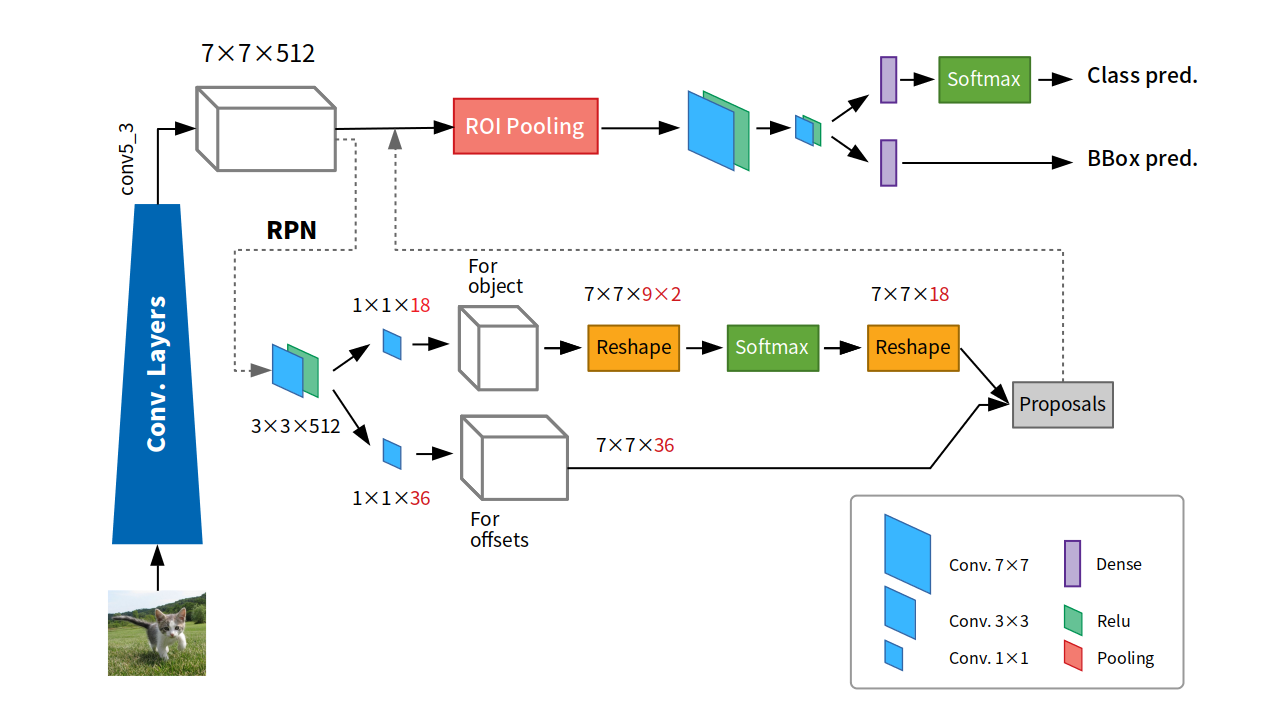

Blog: [物件偵測] S3: Faster R-CNN 簡介 ([Object Detection] S3: Introduction to Faster R-CNN)

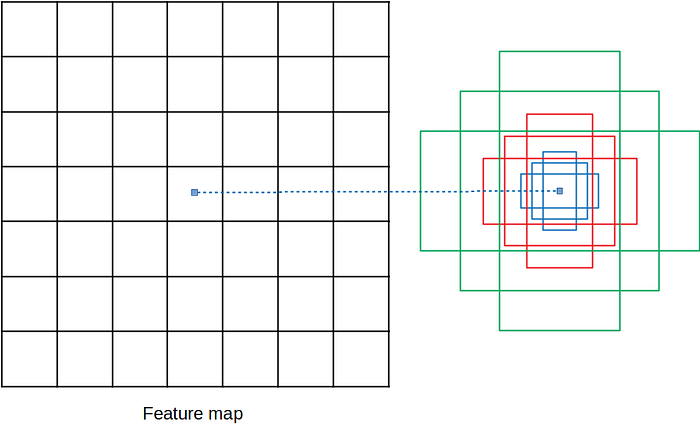

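- The RPN is a small model that proposes regions. It requires us to first define prototype bounding boxes of different scales and aspect ratios for the proposals; these prototypes are called anchors.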

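- The upper branch of the RPN predicts the probability that each anchor contains an object, so the depth of its 1×1 convolution is 9 anchor types × 2 cases (object / no object) = 18. The lower branch predicts the offsets of each anchor's x, y, w, h from the ground truth, so its convolution depth is 9 anchors × 4 offsets (dx, dy, dw, dh) = 36.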
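The anchor bookkeeping described above can be sketched as follows. The 3 scales (128, 256, 512) × 3 aspect ratios (1:2, 1:1, 2:1) are the Faster R-CNN paper's defaults; the offset parameterization dx = (x − xa)/wa, dy = (y − ya)/ha, dw = log(w/wa), dh = log(h/ha) is the standard one from R-CNN-style box regression. The function names are our own, and boxes are assumed to be (cx, cy, w, h) tuples.

```python
import math


def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (cx, cy, w, h) anchors centred at one feature-map location.

    Each anchor keeps area scale**2 while its height/width ratio varies.
    """
    anchors = []
    for s in scales:
        for r in ratios:
            w = s / math.sqrt(r)  # so that h / w == r and w * h == s * s
            h = s * math.sqrt(r)
            anchors.append((cx, cy, w, h))
    return anchors


def encode(box, anchor):
    """Regression targets (dx, dy, dw, dh) of a box relative to an anchor."""
    x, y, w, h = box
    xa, ya, wa, ha = anchor
    return ((x - xa) / wa, (y - ya) / ha, math.log(w / wa), math.log(h / ha))


def decode(offsets, anchor):
    """Inverse of encode: apply predicted offsets to an anchor."""
    dx, dy, dw, dh = offsets
    xa, ya, wa, ha = anchor
    return (xa + dx * wa, ya + dy * ha, wa * math.exp(dw), ha * math.exp(dh))


anchors = make_anchors(100, 100)
k = len(anchors)
cls_channels = 2 * k  # object / not-object score per anchor (upper branch)
reg_channels = 4 * k  # (dx, dy, dw, dh) per anchor (lower branch)
print(k, cls_channels, reg_channels)  # 9 18 36
```

Predicting offsets relative to an anchor, rather than absolute coordinates, keeps the regression targets small and roughly scale-independent.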

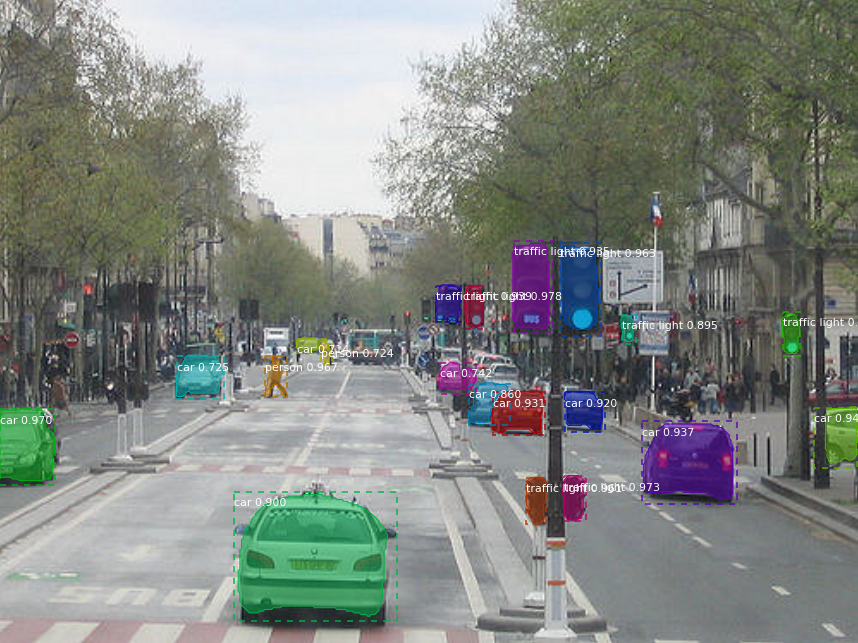

Mask R-CNN

Paper: arxiv.org/abs/1703.06870

Blog: [物件偵測] S9: Mask R-CNN 簡介 ([Object Detection] S9: Introduction to Mask R-CNN)

Code: matterport/Mask_RCNN

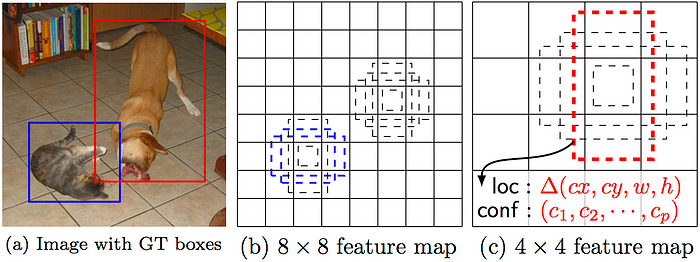

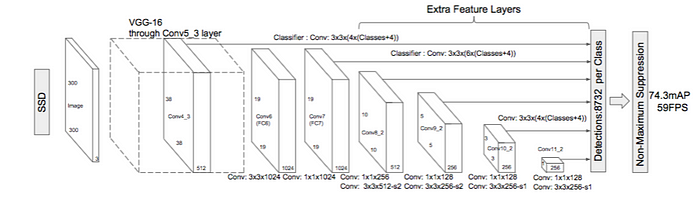

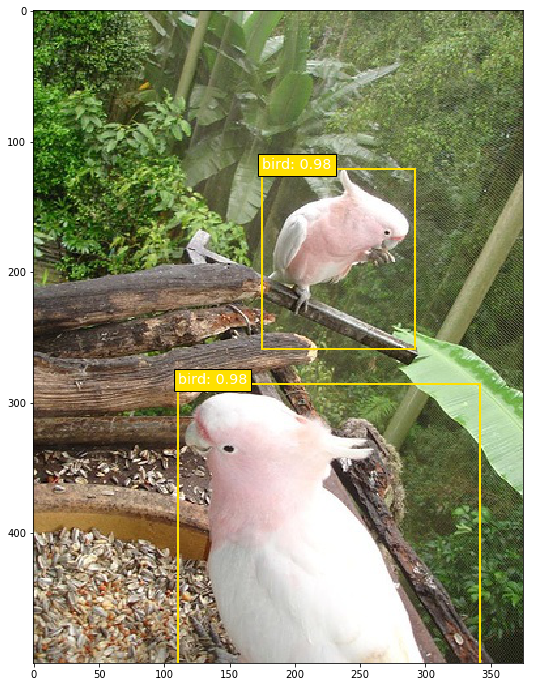

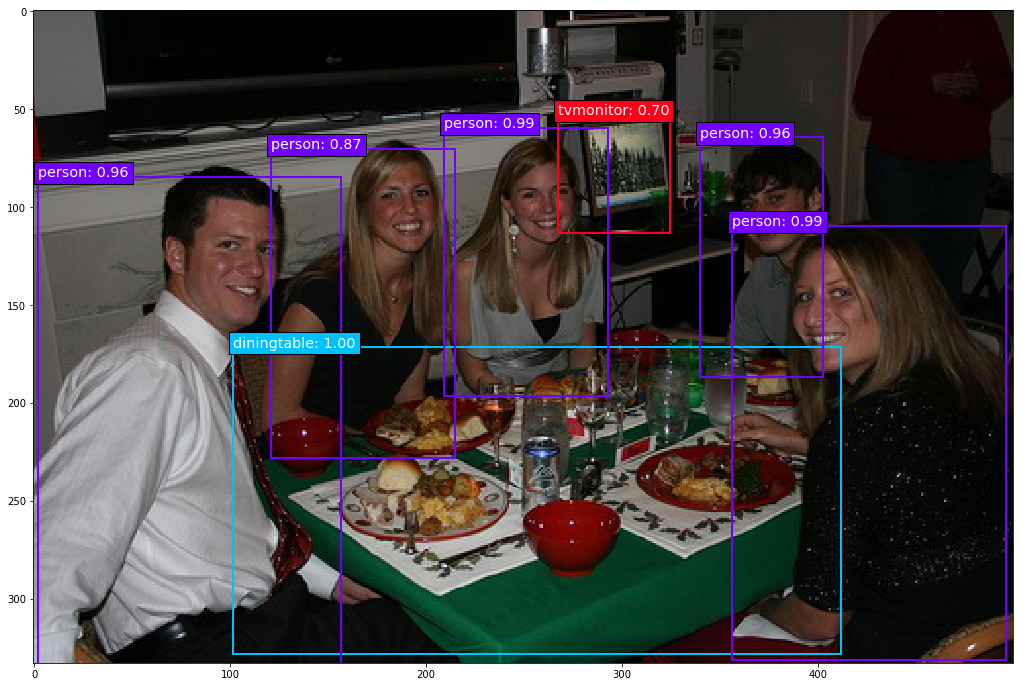

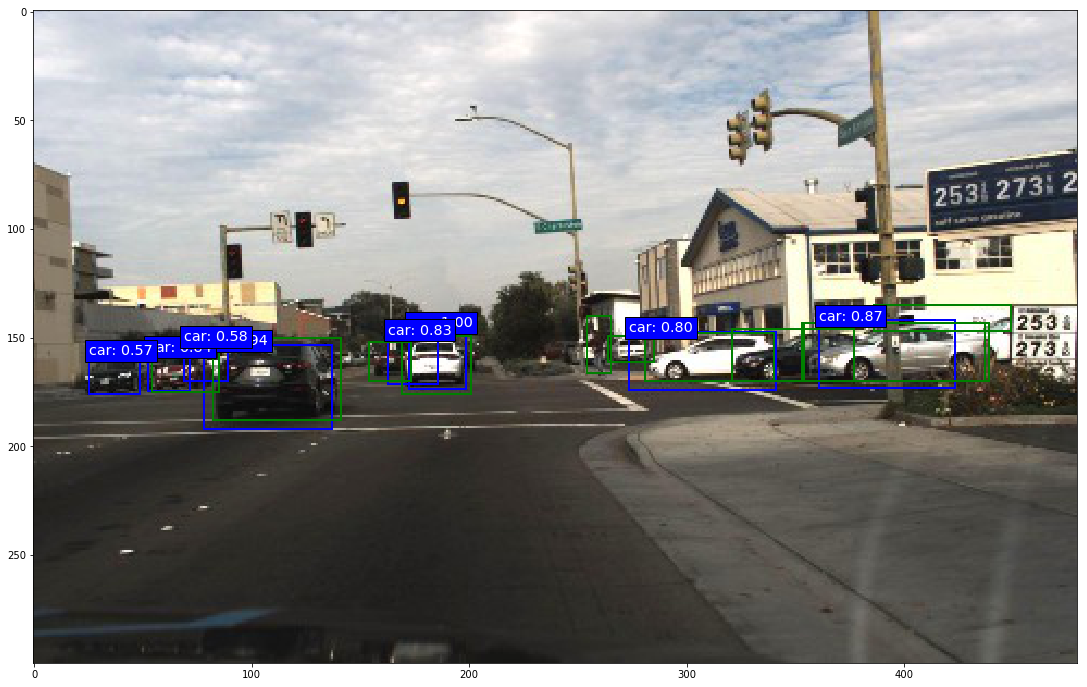

SSD: Single Shot MultiBox Detector

Paper: arxiv.org/abs/1512.02325

Blog: Understanding SSD MultiBox — Real-Time Object Detection In Deep Learning

Uses a neural network (VGG-16) to extract feature maps, then performs classification and regression on them to detect objects.
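SSD makes these predictions on several feature maps of decreasing resolution, each with its own default boxes. The sketch below reproduces the default-box scale rule from the SSD paper, s_k = s_min + (s_max − s_min)(k − 1)/(m − 1) with s_min = 0.2, s_max = 0.9 and m = 6 maps, plus the aspect ratios {1, 2, 3, 1/2, 1/3} and the extra square box of scale sqrt(s_k · s_{k+1}). Sizes are fractions of the input image. The function names are our own, and using 1.0 as the "next" scale for the last map is an assumption (implementations differ).

```python
import math


def ssd_scales(m=6, s_min=0.2, s_max=0.9):
    """Default-box scale for each of the m feature maps (fraction of image size)."""
    return [s_min + (s_max - s_min) * (k - 1) / (m - 1) for k in range(1, m + 1)]


def default_box_sizes(k, scales, ratios=(1.0, 2.0, 3.0, 1 / 2, 1 / 3)):
    """(w, h) of the default boxes on feature map k (0-based index)."""
    s = scales[k]
    # w = s * sqrt(a), h = s / sqrt(a): every ratio keeps the area s**2.
    sizes = [(s * math.sqrt(a), s / math.sqrt(a)) for a in ratios]
    # Extra square box between this scale and the next one.
    # Assumption: use 1.0 as the next scale for the last map.
    s_next = scales[k + 1] if k + 1 < len(scales) else 1.0
    s_extra = math.sqrt(s * s_next)
    sizes.append((s_extra, s_extra))
    return sizes


scales = ssd_scales()
for k in range(len(scales)):
    print(k, round(scales[k], 2), len(default_box_sizes(k, scales)))
```

Low-resolution maps thus get large default boxes and high-resolution maps get small ones. The paper's SSD300 configuration drops the 3 and 1/3 ratios on some layers, leaving four boxes per location there.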


Code: pierluigiferrari/ssd_keras


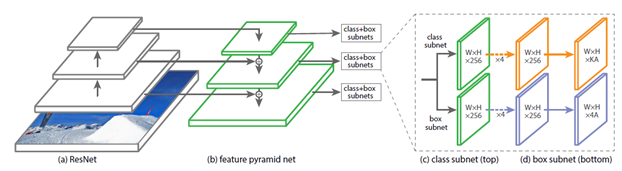

RetinaNet

Paper: Focal Loss for Dense Object Detection

Blog: RetinaNet 介紹 (Introduction to RetinaNet)

From left to right, it uses:

- A residual network (ResNet)

- A Feature Pyramid Network (FPN)

- A classification subnet (class subnet)

- A box regression subnet (box subnet)

- Anchors
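The key ingredient named in the paper title is the focal loss, FL(p_t) = −α_t (1 − p_t)^γ log(p_t), which scales cross-entropy down for examples the model already classifies well. Because the dense anchors are mostly easy background, this stops them from dominating training. A minimal per-anchor sketch, assuming a binary label y ∈ {0, 1} and the paper's defaults α = 0.25, γ = 2; `focal_loss` and `cross_entropy` are our own helper names.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    """Binary focal loss for one anchor and one class.

    p: predicted probability that the class is present; y: 1 or 0 label.
    """
    p_t = p if y == 1 else 1.0 - p              # probability of the true label
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, eps))


def cross_entropy(p, y, eps=1e-12):
    p_t = p if y == 1 else 1.0 - p
    return -math.log(max(p_t, eps))


# Scaling factor relative to plain cross-entropy, alpha_t * (1 - p_t)**gamma:
# easy negative (p = 0.01 on a background anchor): 0.75 * (1 - 0.99)**2 = 7.5e-5
# hard positive (p = 0.1 on a real object):        0.25 * (1 - 0.1)**2  = 0.2025
for p, y in [(0.01, 0), (0.1, 1)]:
    print(p, y, focal_loss(p, y) / cross_entropy(p, y))
```

With α = 1 and γ = 0 the function reduces to ordinary cross-entropy, which is a quick sanity check.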

Blog: Review: RetinaNet — Focal Loss

Code: keras-retinanet

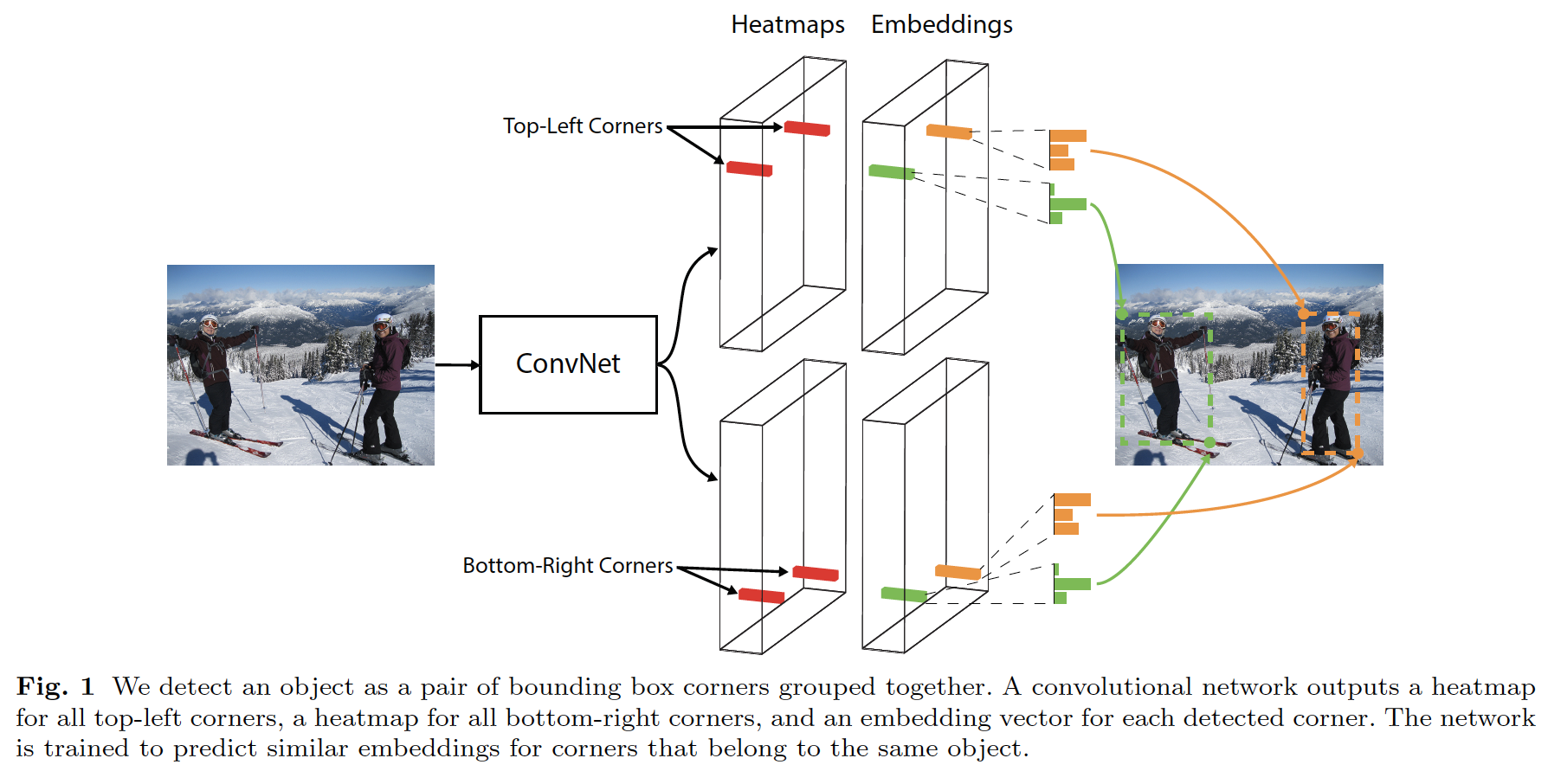

CornerNet

Paper: CornerNet: Detecting Objects as Paired Keypoints

Code: princeton-vl/CornerNet


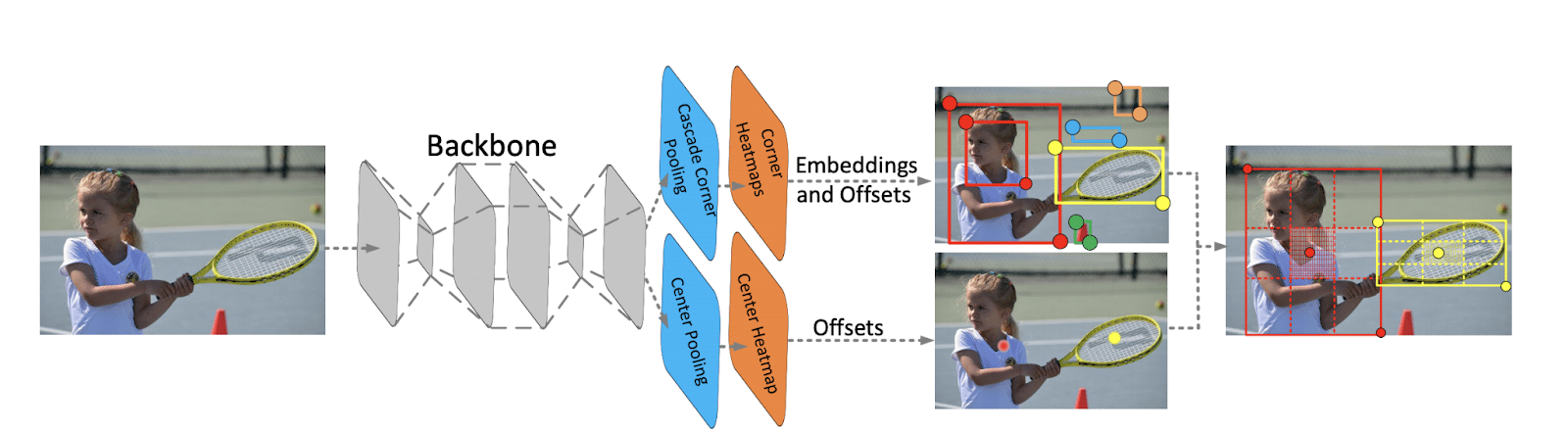

CenterNet

Paper: CenterNet: Keypoint Triplets for Object Detection

Code: xingyizhou/CenterNet


EfficientDet

Paper: arxiv.org/abs/1911.09070

Code: google efficientdet


Kaggle: rkuo2000/efficientdet-gwd

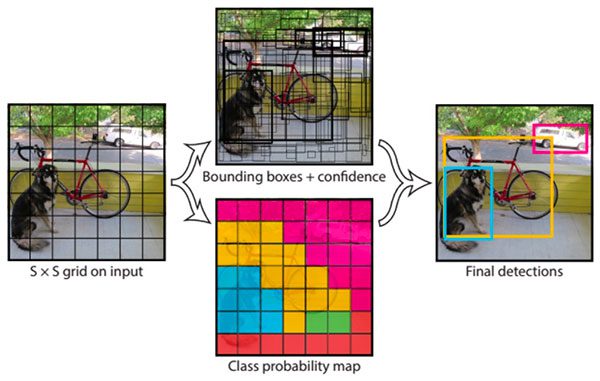

YOLO : You Only Look Once

Code: pjreddie/darknet

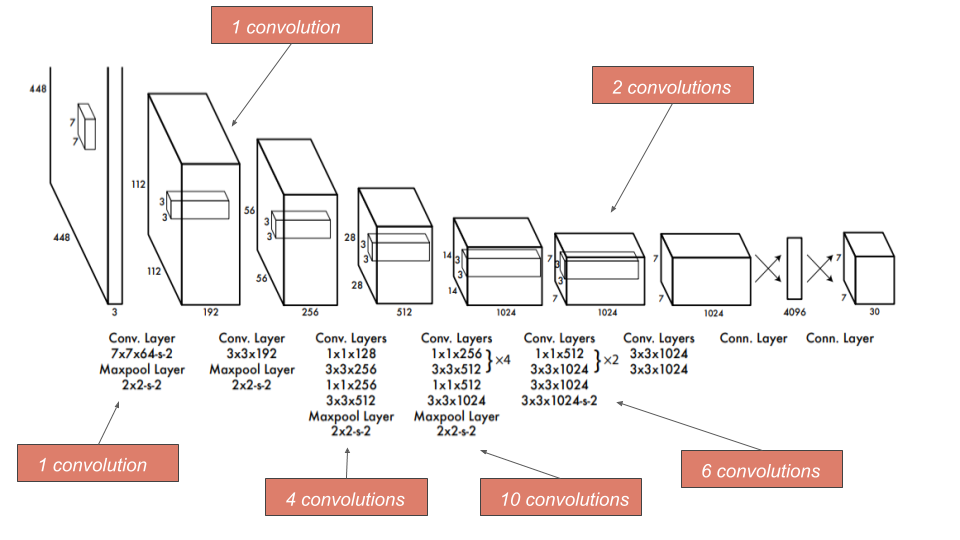

YOLOv1 : mapping bounding box
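In YOLOv1 the image is divided into an S × S grid, and the cell containing an object's centre is responsible for predicting it. The box centre is expressed relative to that cell and the width and height relative to the whole image. A minimal sketch of that mapping, assuming the paper's S = 7 and pixel boxes given as (x1, y1, x2, y2); `yolo_v1_target` is a hypothetical helper name.

```python
def yolo_v1_target(box, img_w, img_h, S=7):
    """Map an (x1, y1, x2, y2) pixel box onto the S x S grid.

    Returns (row, col, x, y, w, h): the responsible cell, the centre offset
    inside that cell (0..1) and the width/height relative to the image.
    """
    x1, y1, x2, y2 = box
    cx = (x1 + x2) / 2 / img_w  # normalised centre
    cy = (y1 + y2) / 2 / img_h
    col = min(int(cx * S), S - 1)
    row = min(int(cy * S), S - 1)
    x = cx * S - col  # offset within the responsible cell
    y = cy * S - row
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return row, col, x, y, w, h


print(yolo_v1_target((100, 200, 300, 400), 448, 448))

# Each cell predicts B boxes (x, y, w, h, confidence) plus C class scores.
S, B, C = 7, 2, 20
print(S * S * (B * 5 + C))  # 1470 values, i.e. a 7 x 7 x 30 output tensor
```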

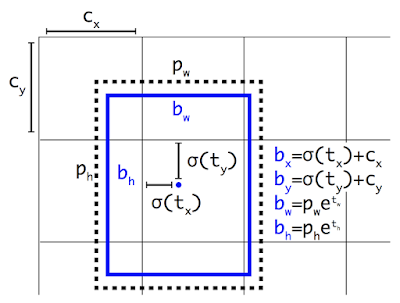

YOLOv2 : anchor boxes chosen by K-means clustering


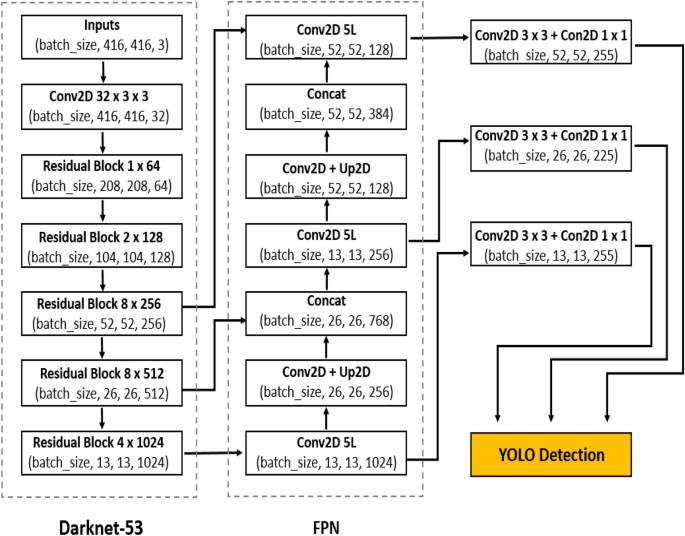

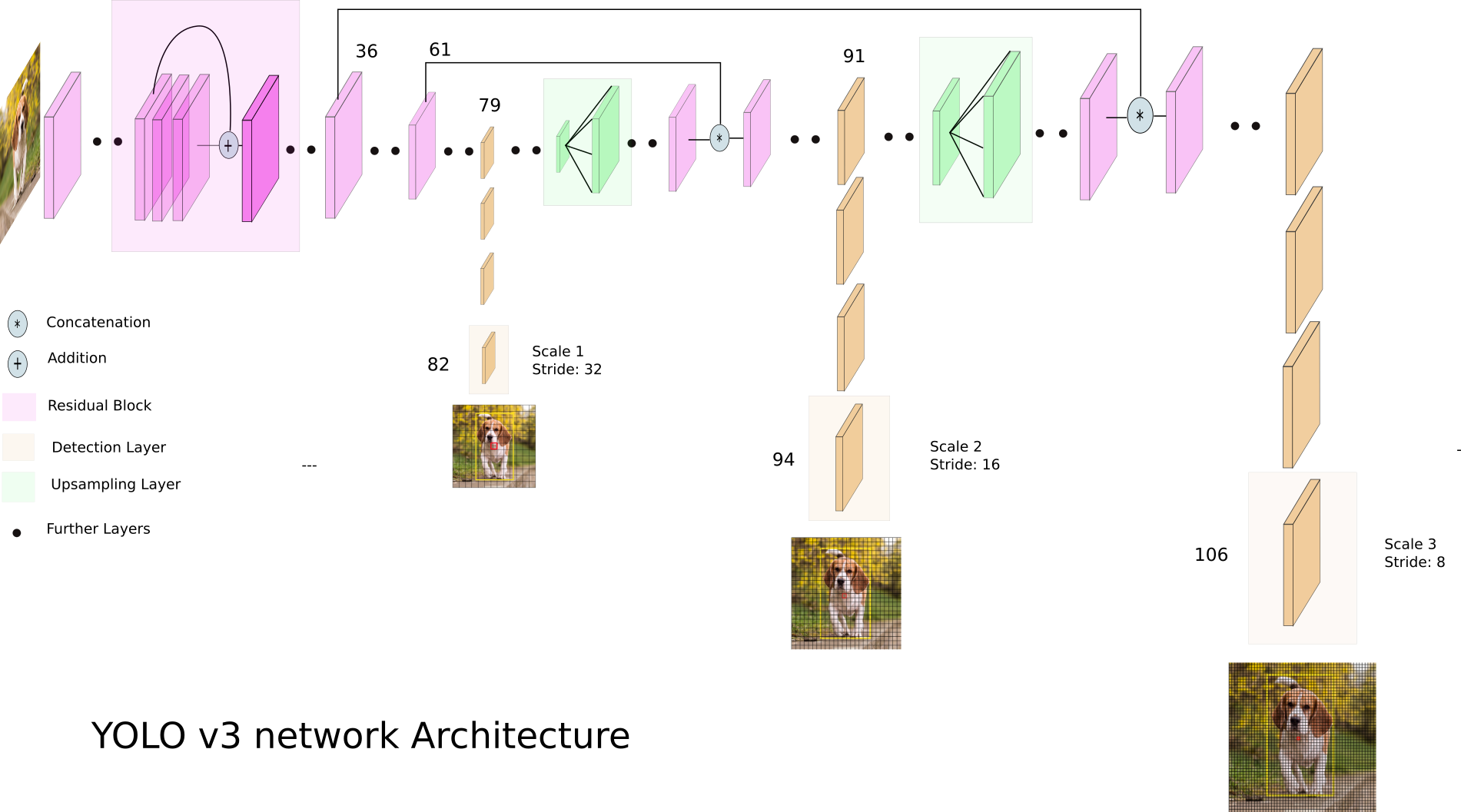

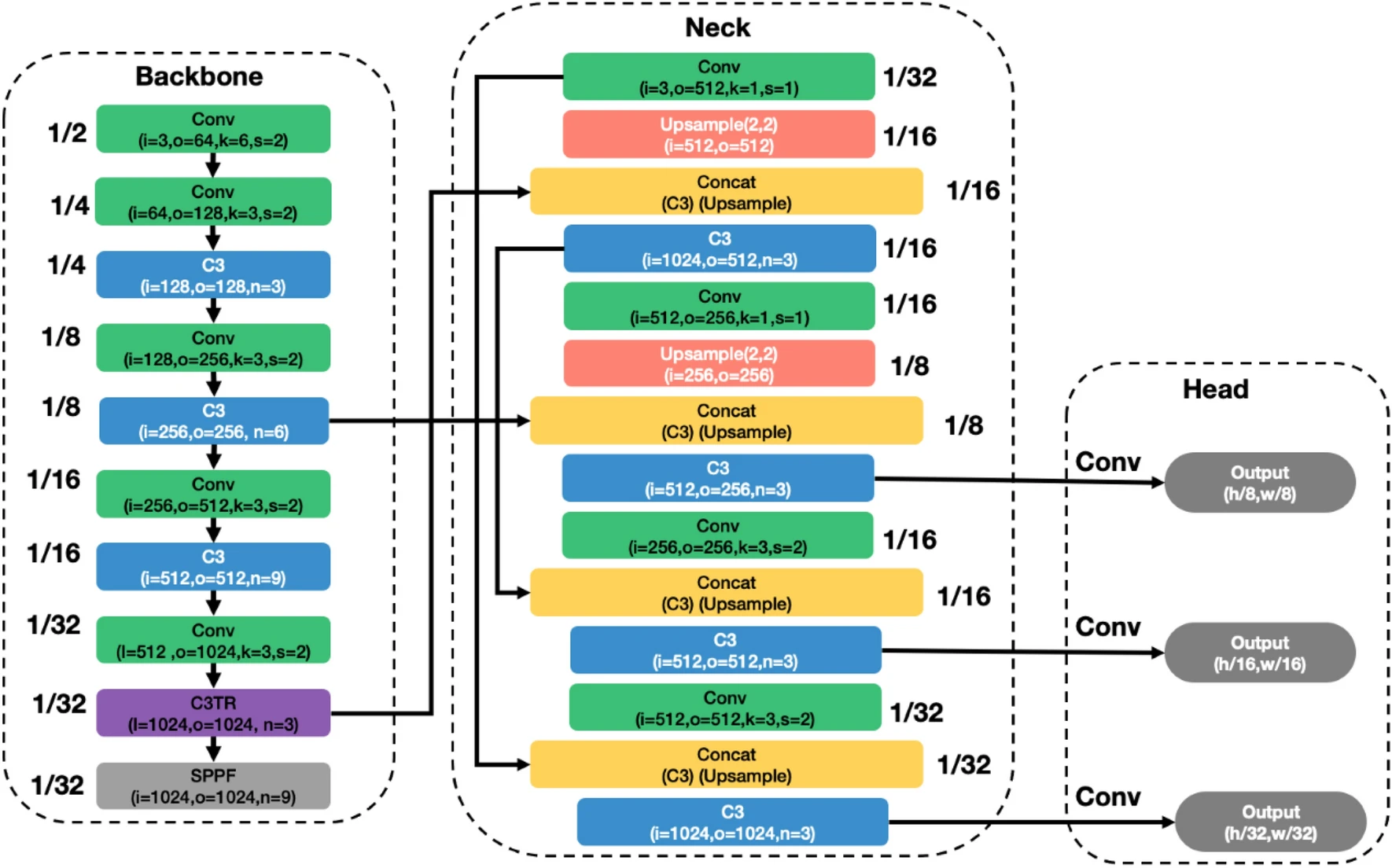

YOLOv3 : Darknet-53 + FPN
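With an FPN-style neck, YOLOv3 predicts at three scales: the deepest Darknet-53 features give a coarse grid, and upsampled features merged with earlier layers give two finer grids. Each grid cell predicts 3 anchors, each with 4 box offsets, 1 objectness score and one score per class. A small sketch of the resulting shapes, assuming a 416 × 416 input, strides 32/16/8 and the 80 COCO classes; `yolo_v3_heads` is a hypothetical helper name.

```python
def yolo_v3_heads(img_size=416, num_classes=80, anchors_per_scale=3,
                  strides=(32, 16, 8)):
    """Grid size and channel depth of each YOLOv3 detection scale."""
    depth = anchors_per_scale * (4 + 1 + num_classes)  # box, objectness, classes
    return [(img_size // s, img_size // s, depth) for s in strides]


heads = yolo_v3_heads()
print(heads)  # [(13, 13, 255), (26, 26, 255), (52, 52, 255)]
total = sum(g * g * 3 for g, _, _ in heads)  # 3 anchors per grid cell
print(total)  # 10647 candidate boxes per 416 x 416 image
```

The 52 × 52 grid, built from the shallowest merged features, is the one that handles small objects.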


YOLObile

Paper: arxiv.org/abs/2009.05697

Blog: YOLObile:移動設備上的實時目標檢測 (YOLObile: Real-Time Object Detection on Mobile Devices)

Code: nightsnack/YOLObile


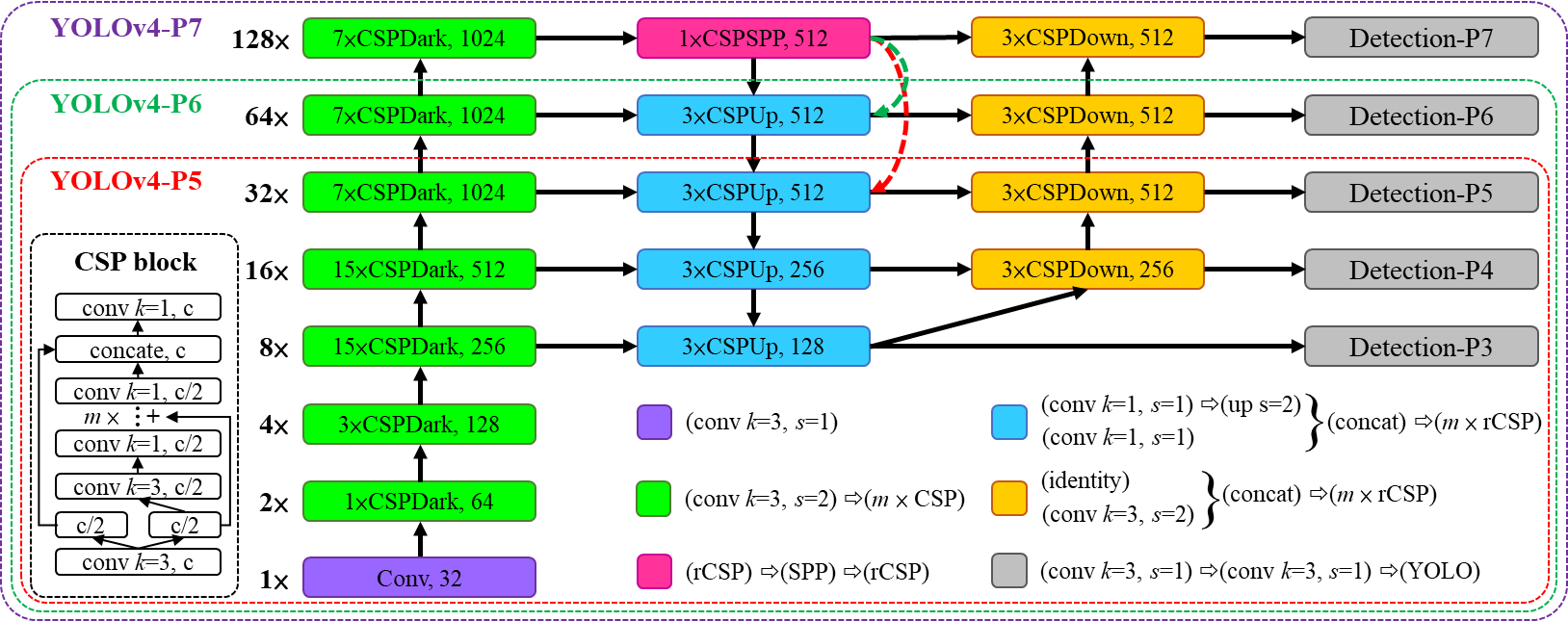

YOLOv4

Paper: YOLOv4: Optimal Speed and Accuracy of Object Detection

- YOLOv4 = YOLOv3 + CSPDarknet53 + SPP + PAN + BoF + BoS

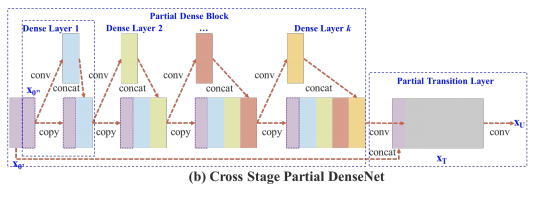

- CSP

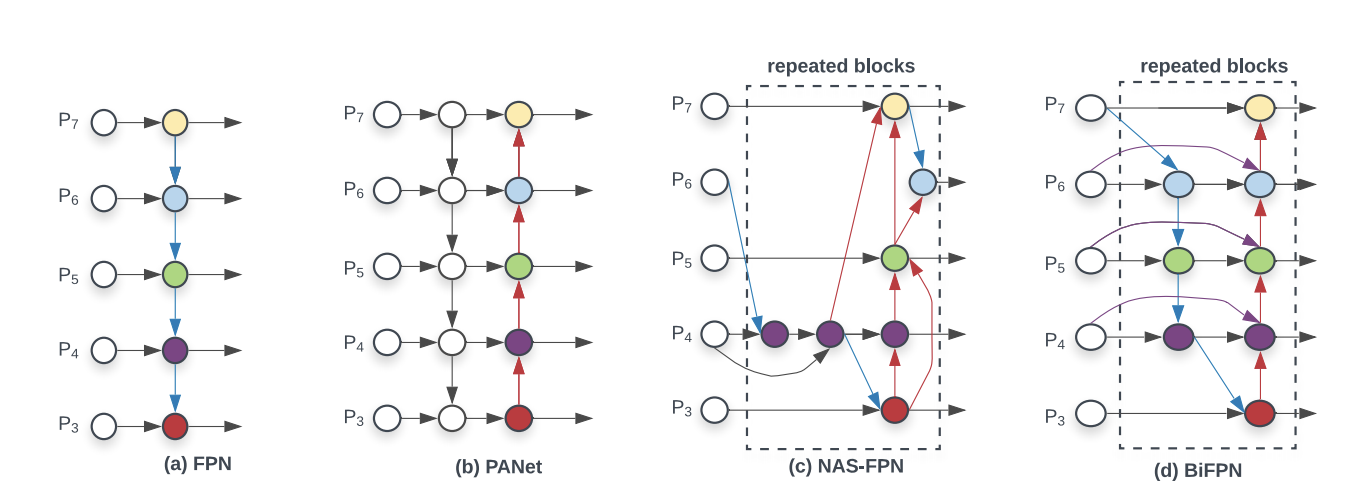

- PANet

Code: AlexeyAB/darknet

Code: WongKinYiu/PyTorch_YOLOv4

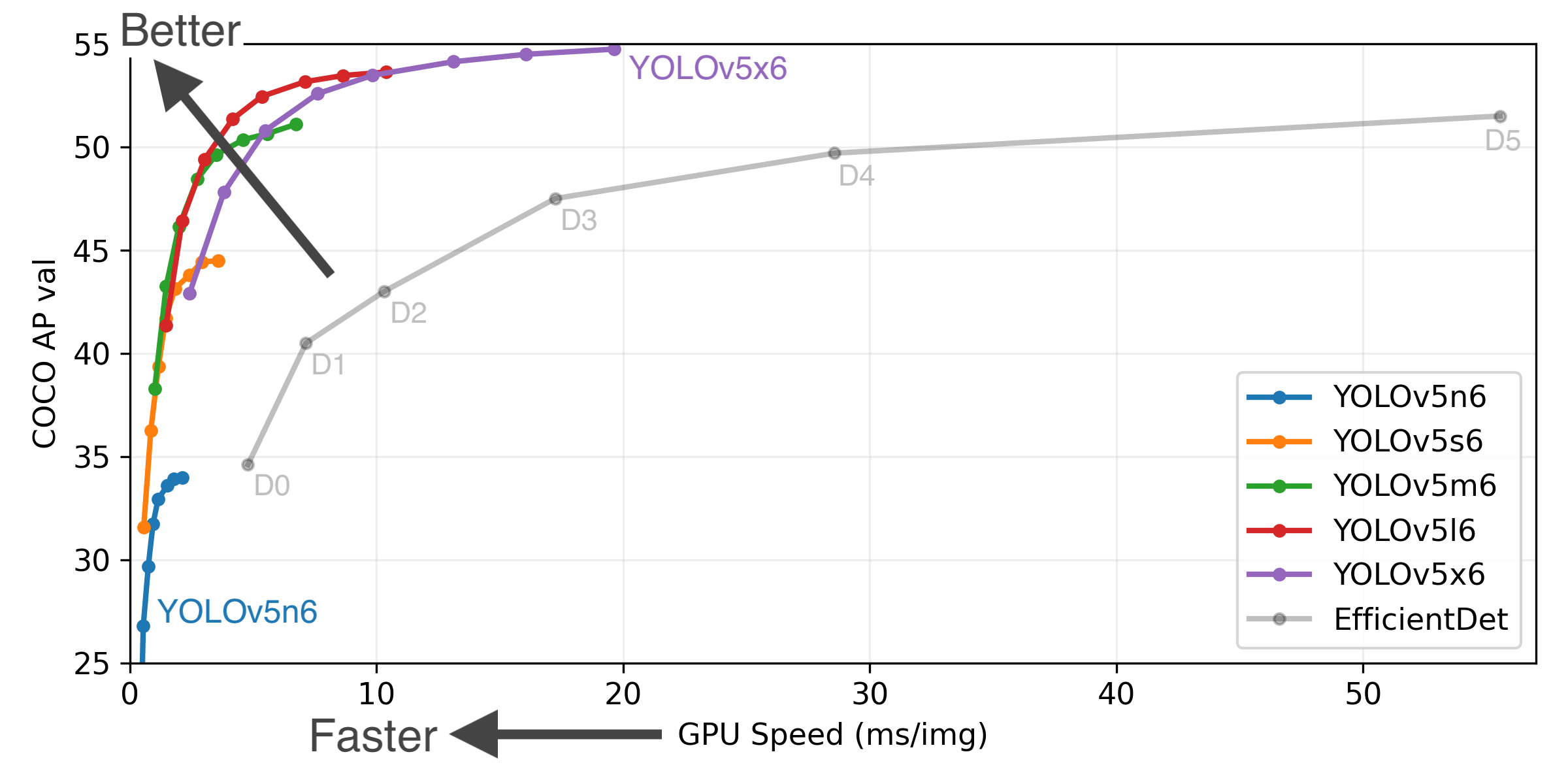

YOLOv5

Code: ultralytics/yolov5/


Scaled-YOLOv4

Paper: arxiv.org/abs/2011.08036

Code: WongKinYiu/ScaledYOLOv4


YOLOR : You Only Learn One Representation

Paper: arxiv.org/abs/2105.04206

Code: WongKinYiu/yolor


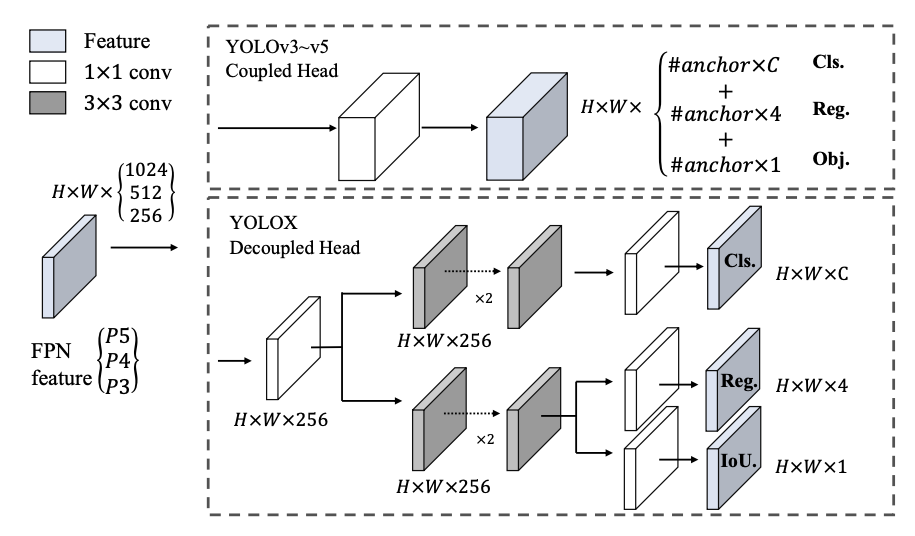

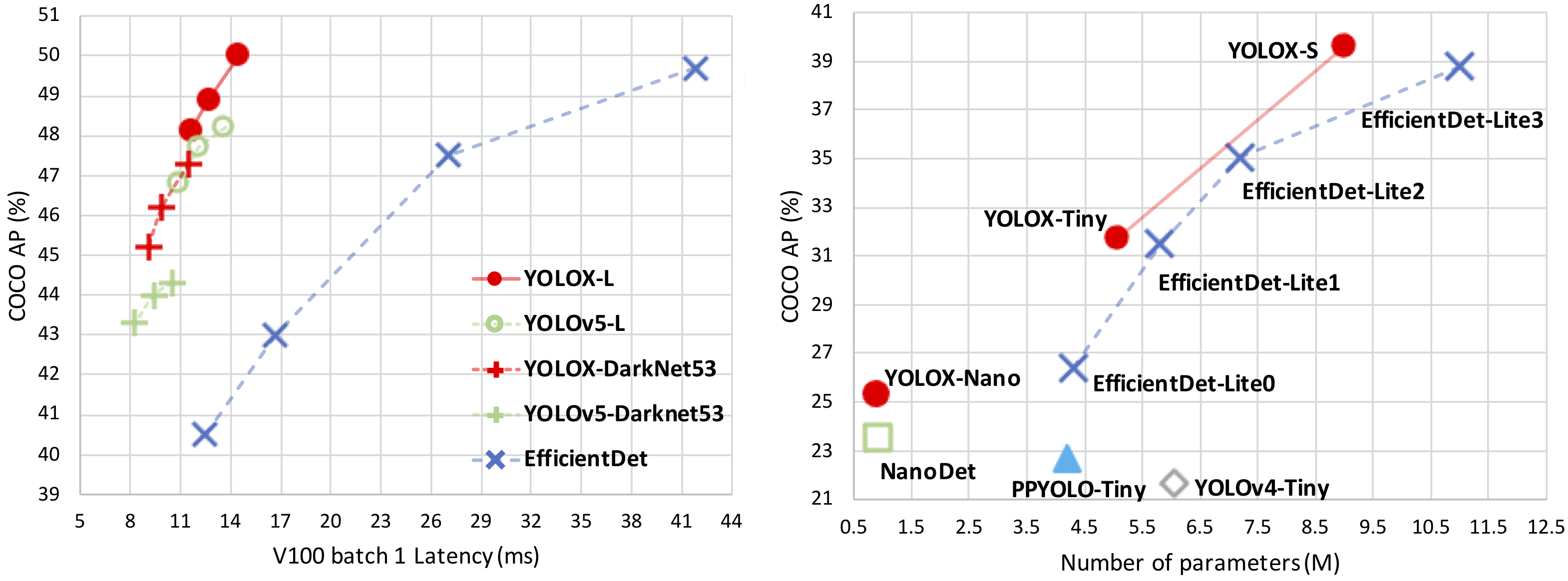

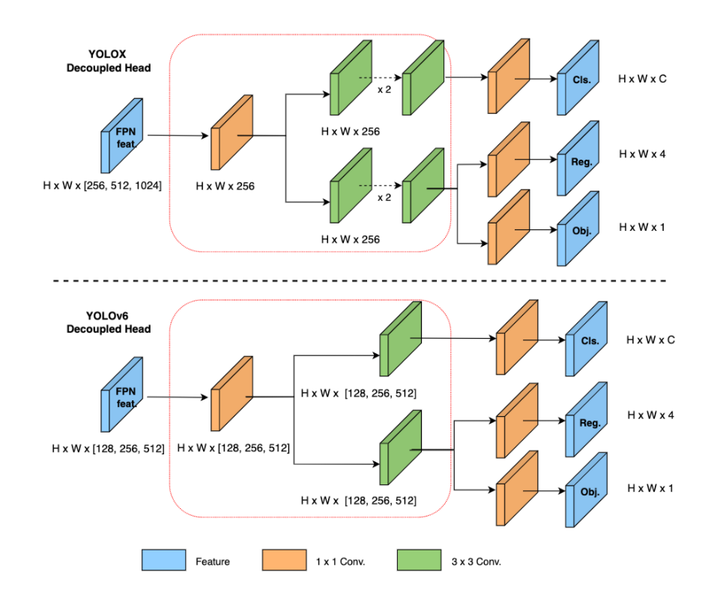

YOLOX

Paper: arxiv.org/abs/2107.08430

Code: Megvii-BaseDetection/YOLOX


YOLOv5 vs YOLOX

Paper: Evaluation of YOLO Models with Sliced Inference for Small Object Detection

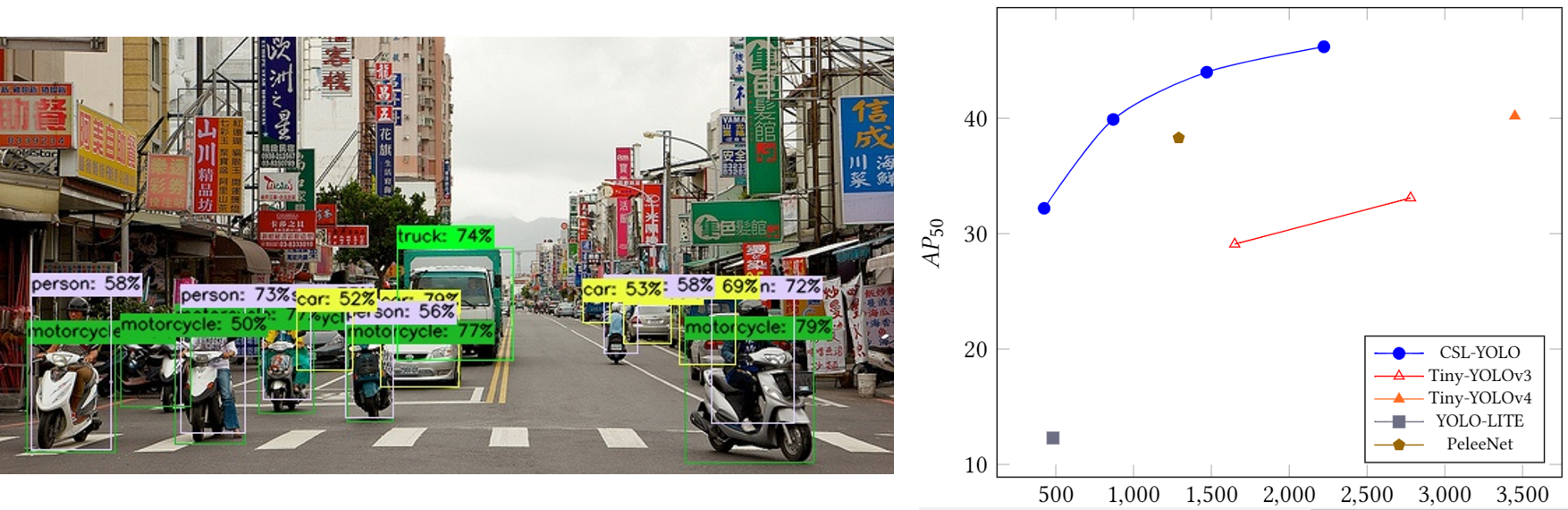

CSL-YOLO

Paper: arxiv.org/abs/2107.04829

Code: D0352276/CSL-YOLO

Camera Demo


PP-YOLOE

Paper: PP-YOLOE: An evolved version of YOLO

Code: PaddleDetection


Kaggle: rkuo2000/pp-yoloe


YOLOv6

Blog: YOLOv6:又快又准的目标检测框架开源啦 (YOLOv6: A Fast and Accurate Object Detection Framework Is Now Open Source)

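- RepVGG is a simple yet powerful CNN architecture: during training it uses a high-performing multi-branch model, while at inference it uses a fast, memory-efficient single-path model, striking a better balance between speed and accuracy.

- EfficientRep replaces the stride=2 convolution layers in the backbone with stride=2 RepConv layers, and also replaces the CSP-Block with a RepBlock.

- Likewise, to reduce latency on hardware, the Rep structure is also introduced into the feature-fusion structure of the neck, which uses Rep-PAN.

- Like YOLOX, YOLOv6 decouples the detection head, separating bounding-box regression from classification.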
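The RepVGG idea of training with several branches and running one convolution at inference works because convolution is linear. A 1×1 kernel equals a 3×3 kernel that is zero except at the centre, and the identity branch equals a 3×3 kernel with a single 1 at the centre, so the three kernels can simply be added. The sketch below checks this on one channel in pure Python. It is a simplification: it assumes stride 1, "same" zero padding and no BatchNorm (RepVGG folds each branch's BatchNorm into its kernel and bias first), and the function names are our own.

```python
def conv2d_same(img, kernel):
    """Single-channel 2D cross-correlation, stride 1, zero 'same' padding."""
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    H, W = len(img), len(img[0])
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    r, c = i + u - ph, j + v - pw
                    if 0 <= r < H and 0 <= c < W:
                        acc += kernel[u][v] * img[r][c]
            out[i][j] = acc
    return out


def train_time_block(img, k3, k1):
    """Multi-branch block: 3x3 conv + 1x1 conv + identity, summed."""
    a = conv2d_same(img, k3)
    b = conv2d_same(img, k1)
    return [[a[i][j] + b[i][j] + img[i][j] for j in range(len(img[0]))]
            for i in range(len(img))]


def fuse(k3, k1):
    """Fold the 1x1 and identity branches into a single 3x3 kernel."""
    fused = [row[:] for row in k3]
    fused[1][1] += k1[0][0]  # 1x1 kernel = 3x3 kernel with only the centre set
    fused[1][1] += 1.0       # identity = 3x3 kernel with centre 1
    return fused


img = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k3 = [[0.1, 0.0, -0.1], [0.2, 0.5, 0.2], [-0.1, 0.0, 0.1]]
k1 = [[0.3]]
slow = train_time_block(img, k3, k1)
fast = conv2d_same(img, fuse(k3, k1))
print(all(abs(s - f) < 1e-9 for rs, rf in zip(slow, fast) for s, f in zip(rs, rf)))  # True
```

At inference only the single fused 3×3 convolution runs, which is what gives the single-path model its speed and memory advantage.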

Code: meituan/YOLOv6

YOLOv7

Paper: YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors

- Extended efficient layer aggregation networks

- Model scaling for concatenation-based models

- Planned re-parameterized convolution

- Coarse for auxiliary and fine for lead head label assigner

Code: WongKinYiu/yolov7

Trash Detection

Localize and Classify Wastes on the Streets

Paper: arxiv.org/abs/1710.11374

Model: GoogLeNet

Street Litter Detection

Code: isaychris/litter-detection-tensorflow

TACO: Trash Annotations in Context

Paper: arxiv.org/abs/2003.06875

Code: pedropro/TACO

Model: Mask R-CNN

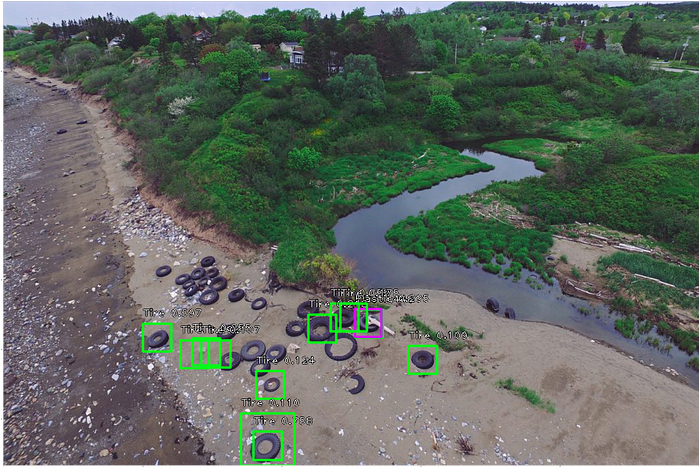

Marine Litter Detection

Paper: arxiv.org/abs/1804.01079

Dataset: Deep-sea Debris Database

Marine Debris Detection

Ref. Detect Marine Debris from Aerial Imagery

Code: yhoztak/object_detection

Model: RetinaNet

UDD dataset

Paper: A New Dataset, Poisson GAN and AquaNet for Underwater Object Grabbing

Dataset: UDD_Official

Concretely, UDD consists of 3 categories (sea cucumber, sea urchin, and scallop) with 2,227 images.

Detecting Underwater Objects (DUO)

Paper: A Dataset And Benchmark Of Underwater Object Detection For Robot Picking

Dataset: DUO

Object Tracking Datasets

Paper: Deep Learning in Video Multi-Object Tracking: A Survey

Multiple Object Tracking (MOT)

Underwater Object Tracking (UOT)

Paper: Underwater Object Tracking Benchmark and Dataset

UOT32

UOT100

Re3 : Real-Time Recurrent Regression Networks for Visual Tracking of Generic Objects

Paper: arxiv.org/abs/1705.06368

Code: moorejee/Re3

Deep SORT

Paper: arxiv.org/abs/1703.07402

Code: nwojke/deep_sort

Blog: Deep SORT多目标跟踪算法代码解析(上) (Code Walkthrough of the Deep SORT Multi-Object Tracking Algorithm, Part 1)

- A Kalman filter to create a “track” and associate track_i with an incoming detection_k

- A distance metric (squared Mahalanobis distance) to quantify the association

- An efficient algorithm (the standard Hungarian algorithm) to associate the data
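The association step can be sketched as follows: score every (track, detection) pair by squared Mahalanobis distance to the Kalman-predicted state, gate out pairs above the 95% chi-square quantile for 4 degrees of freedom (9.4877, the value Deep SORT uses for its 4-D measurement), then solve a minimum-cost assignment. This is a simplified sketch, not the nwojke/deep_sort code. It assumes a diagonal covariance, omits the appearance (cosine) distance that Deep SORT also combines in, and uses brute force over permutations as a stand-in for the Hungarian algorithm, which is only practical for a handful of objects. The function names are our own.

```python
from itertools import permutations

GATE = 9.4877  # chi-square 95% quantile for 4 degrees of freedom
LARGE = 1e5    # cost for gated-out pairs and dummy padding entries


def sq_mahalanobis(z, mean, var):
    """Squared Mahalanobis distance with a diagonal covariance (simplification)."""
    return sum((zi - mi) ** 2 / vi for zi, mi, vi in zip(z, mean, var))


def associate(tracks, detections):
    """tracks: list of (mean, var) predicted by each track's Kalman filter.
    detections: list of measurement vectors, e.g. (x, y, aspect, height).
    Returns the matched (track_idx, det_idx) pairs."""
    n = max(len(tracks), len(detections))
    # Square cost matrix padded with LARGE so unmatched rows/columns are allowed.
    cost = [[LARGE] * n for _ in range(n)]
    for i, (mean, var) in enumerate(tracks):
        for j, z in enumerate(detections):
            d2 = sq_mahalanobis(z, mean, var)
            if d2 <= GATE:
                cost[i][j] = d2
    # Brute-force minimum-cost assignment (stand-in for the Hungarian algorithm).
    best = min(permutations(range(n)),
               key=lambda p: sum(cost[i][p[i]] for i in range(n)))
    # Keep only real, gated-in pairs.
    return [(i, best[i]) for i in range(len(tracks))
            if best[i] < len(detections) and cost[i][best[i]] < LARGE]


tracks = [((0, 0, 1, 10), (1, 1, 0.01, 1)), ((50, 50, 1, 10), (1, 1, 0.01, 1))]
detections = [(51, 49, 1, 10), (0.5, -0.5, 1, 10), (200, 200, 1, 10)]
print(associate(tracks, detections))  # [(0, 1), (1, 0)]
```

The third detection falls outside every gate and stays unmatched; in a full tracker it would start a new tentative track. `scipy.optimize.linear_sum_assignment` is a common real-world replacement for the brute-force step.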

SiamCAR

Paper: arxiv.org/abs/1911.07241

Code: ohhhyeahhh/SiamCAR

YOLOv5 + DeepSort

Code: HowieMa/DeepSORT_YOLOv5_Pytorch

Yolov5 + StrongSORT with OSNet

Code: Yolov5_StrongSORT_OSNet


SiamBAN

Paper: arxiv.org/abs/2003.06761

Code: hqucv/siamban

Blog: [CVPR2020][SiamBAN] Siamese Box Adaptive Network for Visual Tracking

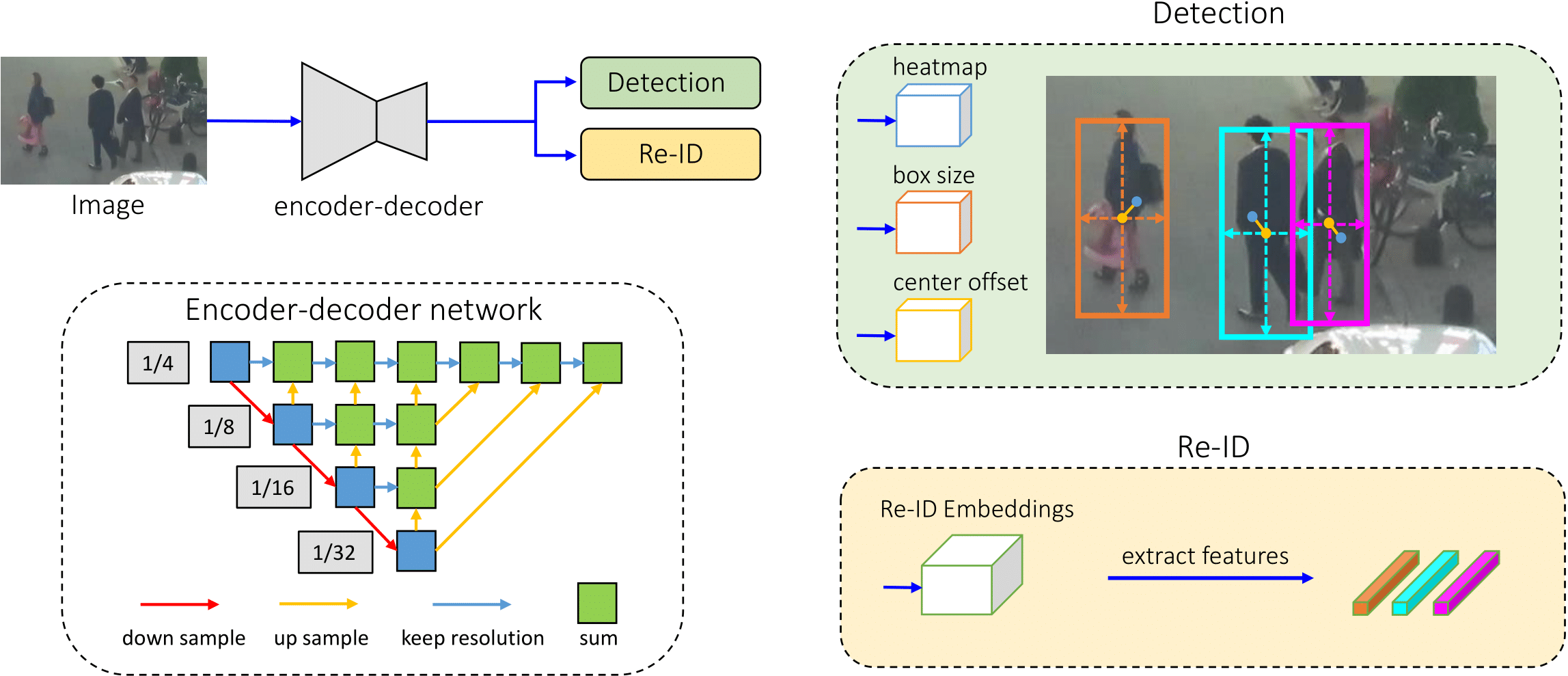

FairMOT

Paper: FairMOT: On the Fairness of Detection and Re-Identification in Multiple Object Tracking

Code: ifzhang/FairMOT

3D-ZeF

Paper: arxiv.org/abs/2006.08466

Code: mapeAAU/3D-ZeF

UAV-based Object Detection and Tracking

Paper: Deep Learning for UAV-based Object Detection and Tracking: A Survey

Efficient Object Detection Model for Real-Time UAV Applications

Paper: Efficient Object Detection Model for Real-Time UAV Applications

Satellite Image Deep Learning

T-CNN : Tubelets with CNN

Paper: arxiv.org/abs/1604.02532

Blog: 人工智慧在太空的應用 (Applications of AI in Space)

Swimming Pool Detection

Dataset: Aerial images of swimming pools

Kaggle: Evaluation Efficientdet - Swimming Pool Detection

Pool-Detection

Code: https://github.com/yacine-benbaccar/Pool-Detection

Identify Military Vehicles in Satellite Imagery

Blog: Identify Military Vehicles in Satellite Imagery with TensorFlow

Dataset: Moving and Stationary Target Acquisition and Recognition (MSTAR) Dataset

The MSTAR dataset was produced between 1995 and 1997 in a collaboration between DARPA and the Air Force Research Laboratory. It contains roughly 1000 SAR images of 8 different vehicle types:

- 2S1 Gvozdika — Self-propelled artillery

- ZSU-23–4 Shilka — Self-propelled anti-aircraft

- BRDM-2 — Amphibious armored scout car

- BTR-60 — Armored personnel carrier

- D7 — Caterpillar Bulldozer

- ZIL-131 — Military cargo truck

- T-62 — Main battle tank

- T-72 — main battle tank (2nd Gen)

- SLICY — Structure acting as a ‘ground truth’ (not a vehicle)

Code: Target Recognition in Synthetic Aperture Radar Imagery Using Deep Learning

script.ipynb

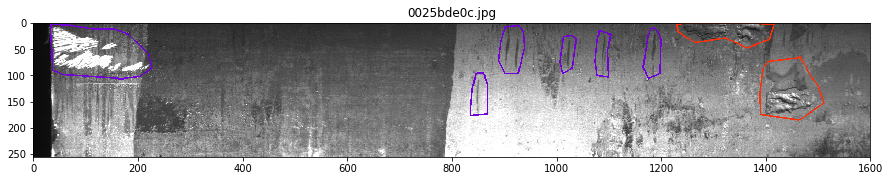

Steel Defect Detection

Dataset: Severstal: Steel Defect Detection

Steel Defect Detection using UNet

Kaggle: https://www.kaggle.com/code/jaysmit/u-net (Keras UNet)

Kaggle: https://www.kaggle.com/code/myominhtet/steel-defection (PyTorch UNet)

Steel-Defect Detection Using CNN

Code: https://github.com/himasha0421/Steel-Defect-Detection

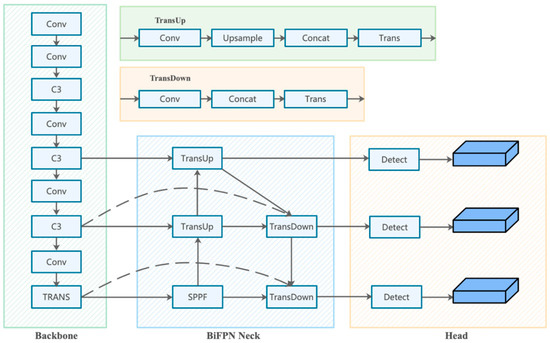

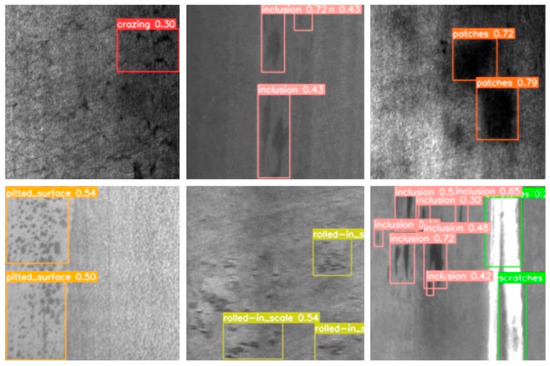

MSFT-YOLO

Paper: MSFT-YOLO: Improved YOLOv5 Based on Transformer for Detecting Defects of Steel Surface

PCB Defect Detection

PCB Datasets

PCB Defect Detection

Paper: PCB Defect Detection Using Denoising Convolutional Autoencoders

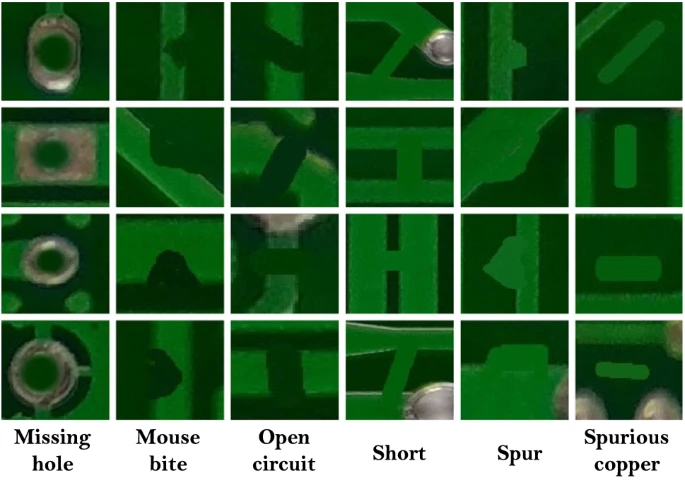

PCB Defect Classification

Dataset: HRIPCB dataset (dropbox)

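Printed circuit board (PCB) defect dataset: a public synthetic PCB dataset containing 1,386 images with 6 defect types (missing hole, mouse bite, open circuit, short, spur, spurious copper), used for image detection, classification, and registration tasks.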

Paper: End-to-end deep learning framework for printed circuit board manufacturing defect classification

This site was last updated December 22, 2022.