Autonomous Driving

Autonomous Driving covers SAE levels, surveys, datasets, camera-based and LiDAR-based methods, end-to-end learning, car simulators, road tests, and Self-Driving 2.0.

SAE Levels of Autonomy

The Society of Automotive Engineers (SAE) defines six levels of driving automation, ranging from 0 (fully manual) to 5 (fully autonomous).

- Level 0: No Automation

The cars most of us drive today. The driver performs all driving tasks with zero autonomy.

- Level 1: Driver Assistance

The driver is still driving, but the car can assist with one task at a time, such as automatic braking or steering assistance.

- Level 2: Partial Automation

The car has at least two automated functions working together, such as steering and speed control. It can drive itself in specific situations such as parking, but the driver must stay attentive and ready to take over.

- Level 3: Conditional Automation

The car can handle “dynamic driving tasks” but still needs the driver to take over immediately when it requests intervention. It is not yet equipped with a fail-safe.

- Level 4: High Automation

The level that many companies aim for nowadays. The cars are officially driverless under certain conditions.

- Level 5: Full Automation

The vehicle can perform all driving functions under all conditions. Some designs might even do away with the steering wheel.
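The six levels map naturally onto an ordered enumeration. The sketch below is only an illustration: the names `SAELevel` and `driver_must_supervise` are hypothetical, and the supervision rule encodes the usual reading that at Levels 0 to 2 the human driver monitors the road at all times.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE levels of driving automation, 0 (manual) to 5 (fully autonomous)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SAELevel) -> bool:
    """Hypothetical helper: True when the human must monitor the road constantly."""
    return level <= SAELevel.PARTIAL_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name, driver_must_supervise(level))
```

Because `IntEnum` members compare as integers, checks such as "Level 4 or above" can be written as `level >= SAELevel.HIGH_AUTOMATION`.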

automakers/software companies

There are three main segments of self-driving in the industry right now: consumer cars, robo-taxis, and driverless trucking.

- Robo-Taxi

Basically, a robo-taxi is an Uber without a driver. A robo-taxi is therefore an L4 or L5 self-driving car, which is often seen as the ultimate goal for a futuristic smart city. It would optimize the rideshare network. People would no longer have to own a car, nor would they have to worry about traffic jams on the way to work.

Companies working on it include but are not limited to Waymo, AutoX, Cruise, DiDi, Pony.ai, Zoox, Aurora, Motional, Optimus Ride (acquired by Magna), Tesla, and Baidu. Specifically, Waymo was the first to open its driverless taxi program to the public, in Phoenix in 2020. AutoX opened its fully driverless robo-taxi service to the public in China around January 2021, and Cruise opened its driverless cars in San Francisco to the public in February 2022. On a side note, Ford- and VW-backed Argo AI was shut down on Oct. 27th, 2022.

- Consumer Cars

The leading company in this realm is Tesla, which has sold millions of its self-manufactured vehicles all over the world. Tesla has also released its subscription-based Full Self-Driving (FSD) software to help drivers navigate urban areas. Don't be misled by the name, though: the system is still Level 2. Xpeng, a main competitor of Tesla, is also doing quite well in China. Other competitors include but are not limited to Mobileye, Nvidia, Apple, GM, Ford, Volvo, and Qualcomm.

- Driverless Trucking

The leading companies include but are not limited to Aurora, TuSimple, Embark Trucks, and Daimler Truck.

Autonomous Vehicles | Self-Driving Vehicles Enacted Legislation

NCSL has a NEW autonomous vehicles legislative database, providing up-to-date, real-time information about state autonomous vehicle legislation that has been introduced in the 50 states and the District of Columbia.

Surveys

A Survey of Autonomous Driving: Common Practices and Emerging Technologies

Explanations in Autonomous Driving: A Survey

Autonomous Driving with Deep Learning: A Survey of State-of-Art Technologies

Open-Source Autonomous Driving Datasets

- A2D2 Dataset for Autonomous Driving

Released by Audi, the Audi Autonomous Driving Dataset (A2D2) supports startups and academic researchers working on autonomous driving. The dataset includes over 41,000 frames labeled with 38 features. Around 2.3 TB in total, A2D2 is split by annotation type (i.e. semantic segmentation, 3D bounding box). In addition to labelled data, A2D2 provides unlabelled sensor data (~390,000 frames) for sequences with several loops.

- ApolloScape Open Dataset for Autonomous Driving

Part of the Apollo project for autonomous driving, ApolloScape is an evolving research project that aims to foster innovation across all aspects of autonomous driving, from perception to navigation and control. Via their website, users can explore a variety of simulation tools and over 100K street view frames, 80K LiDAR point clouds, and 1,000 km of trajectories for urban traffic.

- Argoverse Dataset

Argoverse is made up of two datasets designed to support autonomous vehicle machine learning tasks such as 3D tracking and motion forecasting. Collected by a fleet of autonomous vehicles in Pittsburgh and Miami, the dataset includes 3D tracking annotations for 113 scenes and over 324,000 unique vehicle trajectories for motion forecasting. Unlike most other open-source autonomous driving datasets, Argoverse provides forward-facing stereo imagery.

- Berkeley DeepDrive Dataset

Also known as BDD 100K, the DeepDrive dataset gives users access to 100,000 annotated videos and 10 tasks to evaluate image recognition algorithms for autonomous driving. The dataset represents more than 1000 hours of driving experience with more than 100 million frames, as well as information on geographic, environmental, and weather diversity.

- CityScapes Dataset

CityScapes is a large-scale dataset focused on the semantic understanding of urban street scenes in 50 German cities. It features semantic, instance-wise, and dense pixel annotations for 30 classes grouped into 8 categories. The entire dataset includes 5,000 annotated images with fine annotations, and an additional 20,000 annotated images with coarse annotations.

- Comma2k19 Dataset

This dataset includes 33 hours of commute time recorded on Highway 280 in California. Each 1-minute scene was captured on a 20 km section of highway between San Jose and San Francisco. The data was collected using comma EONs, which feature a road-facing camera, phone GPS, thermometers, and a 9-axis IMU.

- Google-Landmarks Dataset

Published by Google in 2018, the Landmarks dataset is divided into two sets of images to evaluate recognition and retrieval of human-made and natural landmarks. The original dataset contains over 2 million images depicting 30 thousand unique landmarks from across the world. In 2019, Google published Landmarks-v2, an even larger dataset with 5 million images and 200k landmarks. -

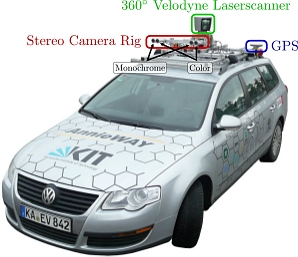

KITTI Vision Benchmark Suite

First released in 2012 by Geiger et al., the KITTI dataset was intended to advance autonomous driving research with a novel set of real-world computer vision benchmarks. One of the first autonomous driving datasets, KITTI has over 4,000 academic citations and counting.

KITTI provides 2D, 3D, and bird’s eye view object detection datasets, 2D object and multi-object tracking and segmentation datasets, road/lane evaluation detection datasets, both pixel and instance-level semantic datasets, as well as raw datasets.

- LeddarTech PixSet Dataset

Launched in 2021, Leddar PixSet is a publicly available dataset for autonomous driving research and development that contains data from a full AV sensor suite (cameras, LiDARs, radar, IMU), and includes full-waveform data from the Leddar Pixell, a 3D solid-state flash LiDAR sensor. The dataset contains 29k frames in 97 sequences, with more than 1.3M annotated 3D boxes.

- Level 5 Open Data

Published by popular rideshare app Lyft, the Level5 dataset is another great source for autonomous driving data. It includes over 55,000 human-labeled 3D annotated frames captured by 7 cameras and up to 3 LiDAR sensors, plus a surface map and an underlying HD spatial semantic map that can be used to contextualize the data.

- nuScenes Dataset

Developed by Motional, the nuScenes dataset is one of the largest open-source datasets for autonomous driving. Recorded in Boston and Singapore using a full sensor suite (32-beam LiDAR, six cameras covering 360°, and radars), the dataset contains over 1.44 million camera images capturing a diverse range of traffic situations, driving maneuvers, and unexpected behaviors.

- Oxford Radar RobotCar Dataset

The Oxford RobotCar Dataset contains over 100 recordings of a consistent route through Oxford, UK, captured over a period of over a year. The dataset captures many different environmental conditions, including weather, traffic and pedestrians, along with longer term changes such as construction and roadworks.

- PandaSet

PandaSet was the first open-source AV dataset available for both academic and commercial use. It contains 48,000 camera images, 16,000 LiDAR sweeps, 28 annotation classes, and 37 semantic segmentation labels taken from a full sensor suite.

- Udacity Self Driving Car Dataset

Online education platform Udacity has open sourced access to a variety of projects for autonomous driving, including neural networks trained to predict steering angles of the car, camera mounts, and dozens of hours of real driving data.

- Waymo Open Dataset

The Waymo Open Dataset is an open-source multimodal sensor dataset for autonomous driving. Extracted from Waymo self-driving vehicles, the data covers a wide variety of driving scenarios and environments. It contains 1,000 segments, each capturing 20 seconds of continuous driving, corresponding to 200,000 frames at 10 Hz per sensor.
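The frame count quoted for the Waymo Open Dataset follows directly from the segment count, segment length, and sensor rate. A quick check (the constant and function names are only illustrative):

```python
# Figures quoted in the dataset description above.
NUM_SEGMENTS = 1000          # driving segments
SECONDS_PER_SEGMENT = 20     # continuous driving per segment
FRAME_RATE_HZ = 10           # frames per second, per sensor


def total_frames(segments: int, seconds: int, rate_hz: int) -> int:
    """Frames per sensor = segments x seconds per segment x frames per second."""
    return segments * seconds * rate_hz


if __name__ == "__main__":
    print(total_frames(NUM_SEGMENTS, SECONDS_PER_SEGMENT, FRAME_RATE_HZ))  # 200000
```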

KITTI


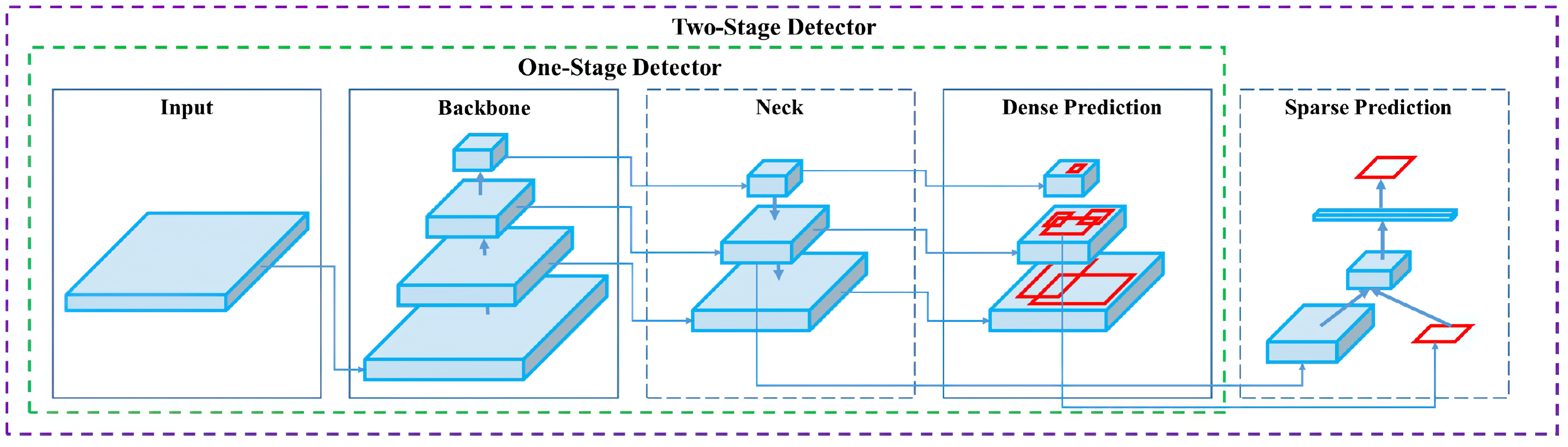

LiDAR-based 3D Object Detection Methods

YOLO3D

Paper: YOLO3D: End-to-end real-time 3D Oriented Object Bounding Box Detection from LiDAR Point Cloud

Code: maudzung/YOLO3D-YOLOv4-PyTorch

- Inputs: Bird-eye-view (BEV) maps that are encoded by height, intensity and density of 3D LiDAR point clouds.

- The input size: 608 x 608 x 3

- Outputs: 7 degrees of freedom (7-DOF) of objects: (cx, cy, cz, l, w, h, θ)
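The BEV input described above can be sketched in plain Python. This is a minimal illustration, not the code from the YOLO3D repository: the function name `make_bev_map`, the crop ranges, and the density normalization `log(N + 1) / log(64)` are assumptions. Only the three channels (height, intensity, density) and the 608 x 608 x 3 size come from the text.

```python
import math


def make_bev_map(points, x_range=(0.0, 50.0), y_range=(-25.0, 25.0),
                 z_range=(-2.0, 2.0), size=608):
    """Encode LiDAR points (x, y, z, intensity) as a size x size x 3 BEV grid.

    Channels per cell: normalized max height, intensity of the highest point,
    and a log-normalized point density. All ranges are assumed values.
    """
    x_min, x_max = x_range
    y_min, y_max = y_range
    z_min, z_max = z_range
    height = [[0.0] * size for _ in range(size)]
    intensity = [[0.0] * size for _ in range(size)]
    count = [[0] * size for _ in range(size)]

    for x, y, z, r in points:
        # Drop points outside the cropped region of interest.
        if not (x_min <= x < x_max and y_min <= y < y_max and z_min <= z < z_max):
            continue
        row = min(size - 1, int((x - x_min) / (x_max - x_min) * size))
        col = min(size - 1, int((y - y_min) / (y_max - y_min) * size))
        h = (z - z_min) / (z_max - z_min)
        count[row][col] += 1
        if h >= height[row][col]:  # keep the highest point seen in this cell
            height[row][col] = h
            intensity[row][col] = r

    return [[(height[i][j],
              intensity[i][j],
              min(1.0, math.log(count[i][j] + 1) / math.log(64)))
             for j in range(size)]
            for i in range(size)]


if __name__ == "__main__":
    cloud = [(10.0, 0.0, 0.0, 0.5), (10.0, 0.0, 1.0, 0.9)]  # made-up points
    bev = make_bev_map(cloud)
    print(len(bev), len(bev[0]), len(bev[0][0]))  # 608 608 3
```

A practical implementation would normally use NumPy arrays for speed; nested lists are used here only to keep the sketch dependency-free.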

YOLOv4 architecture

YOLO4D

Camera-based 3D Object Detection Methods

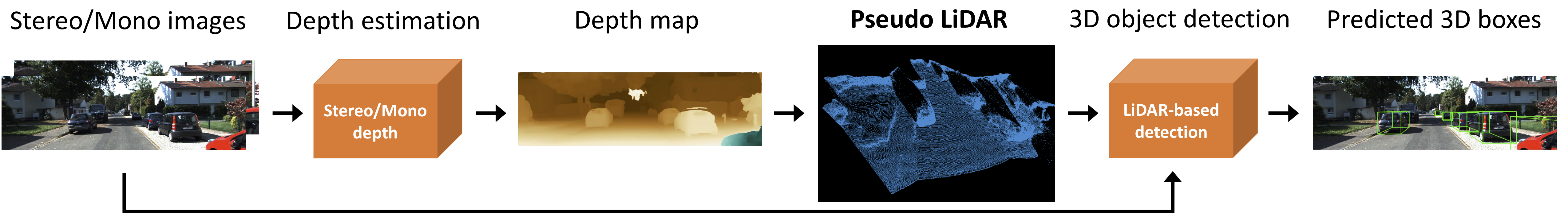

Pseudo-LiDAR

Paper: Pseudo-LiDAR from Visual Depth Estimation: Bridging the Gap in 3D Object Detection for Autonomous Driving

Code: mileyan/pseudo_lidar

Stereo R-CNN based 3D Object Detection

Paper: Stereo R-CNN based 3D Object Detection for Autonomous Driving

Code: HKUST-Aerial-Robotics/Stereo-RCNN

Monocular Camera-based Depth Estimation Methods

DF-Net

Paper: DF-Net: Unsupervised Joint Learning of Depth and Flow using Cross-Task Consistency

Code: vt-vl-lab/DF-Net

- The model in the paper takes 2 frames as input, while this code takes 5 frames (any odd number of frames can be used, though the hyper-parameters would need tuning)

- FlowNet in the paper is pre-trained on SYNTHIA, while this one is pre-trained on Cityscapes
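One plausible reason for the odd frame count is that the target frame sits in the middle of each input snippet, with equal numbers of source frames on either side. The sketch below builds such index windows; `frame_windows` is a hypothetical helper and the centered-target layout is an assumption, not something taken from the DF-Net code.

```python
def frame_windows(num_frames, window=5):
    """Return index windows of odd length, each centered on a target frame."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    # Only centers with enough neighbors on both sides produce a full window.
    return [list(range(center - half, center + half + 1))
            for center in range(half, num_frames - half)]


if __name__ == "__main__":
    for snippet in frame_windows(7, window=5):
        print(snippet)
```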

Depth Fusion Methods with LiDAR and Camera

DFineNet

Paper: DFineNet: Ego-Motion Estimation and Depth Refinement from Sparse, Noisy Depth Input with RGB Guidance

Code: vt-vl-lab/DF-Net

3D Object Detection Methods with LiDAR and Camera

PointFusion

Paper: PointFusion: Deep Sensor Fusion for 3D Bounding Box Estimation

Code: mialbro/PointFusion

MVX-Net

Paper: MVX-Net: Multimodal VoxelNet for 3D Object Detection

Code: mmdetection3d/mvxnet

DeepVO

Paper: DeepVO: Towards End-to-End Visual Odometry with Deep Recurrent Convolutional Neural Networks

Code: ChiWeiHsiao/DeepVO-pytorch

Pedestrian Behavior Prediction Methods

Predicting Future Person Activities and Locations in Videos

Paper: Peeking into the Future: Predicting Future Person Activities and Locations in Videos


Spectral Trajectory and Behavior Prediction

Paper: Forecasting Trajectory and Behavior of Road-Agents Using Spectral Clustering in Graph-LSTMs

Code: Xiejc97/Spectral-Trajectory-and-Behavior-Prediction


Vehicle Behavior Prediction Methods

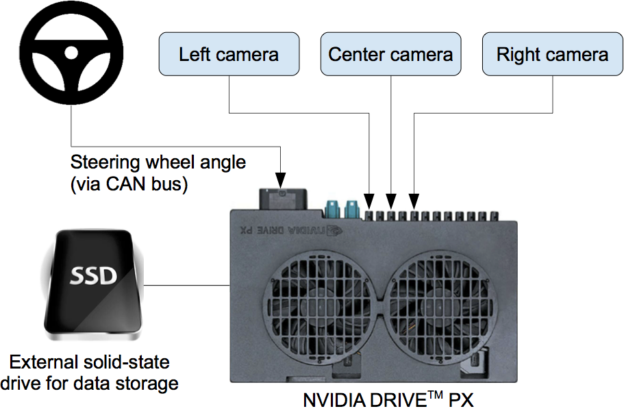

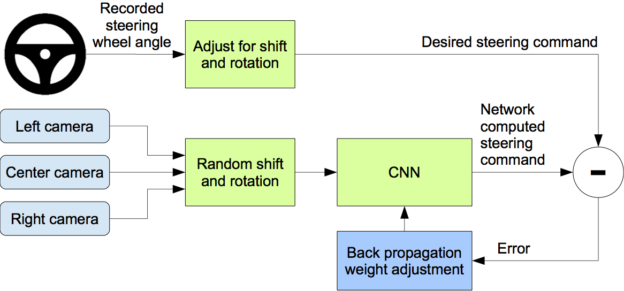

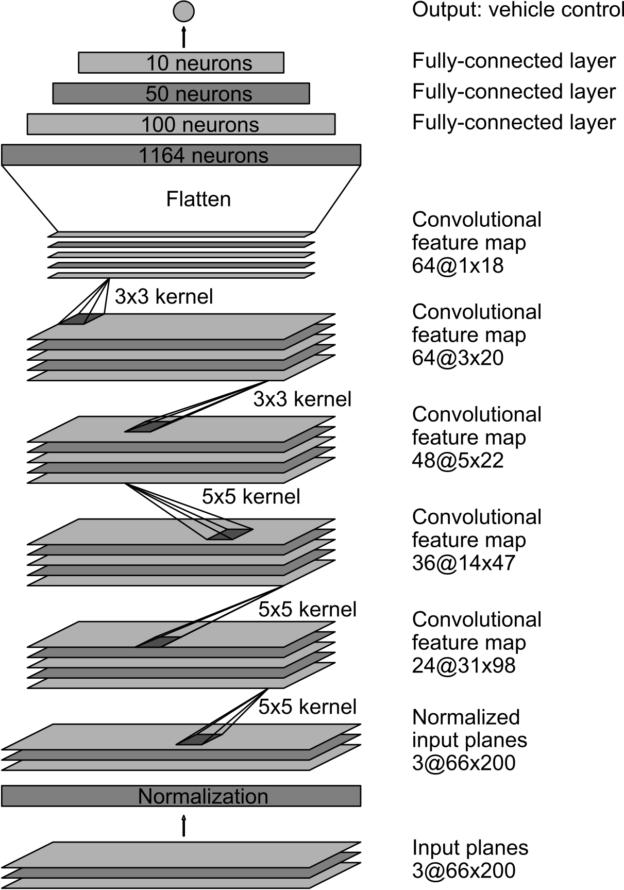

End-to-End Deep Learning for Self-Driving Cars

Blog: End-to-End Deep Learning for Self-Driving Cars

End-to-End Learning of Driving Models

Paper: End-to-End Learning of Driving Models with Surround-View Cameras and Route Planners

End-to-end Learning in Simulated Urban Environments

Paper: Autonomous Vehicle Control: End-to-end Learning in Simulated Urban Environments

NeuroTrajectory

ChauffeurNet (Waymo)

Paper: ChauffeurNet: Learning to Drive by Imitating the Best and Synthesizing the Worst

Code: aidriver/ChauffeurNet

Renderings: With Stop Signs Rendered, No Stop Signs Rendered, With Perception Boxes Rendered, No Perception Boxes Rendered

GitHub: Iftimie/ChauffeurNet

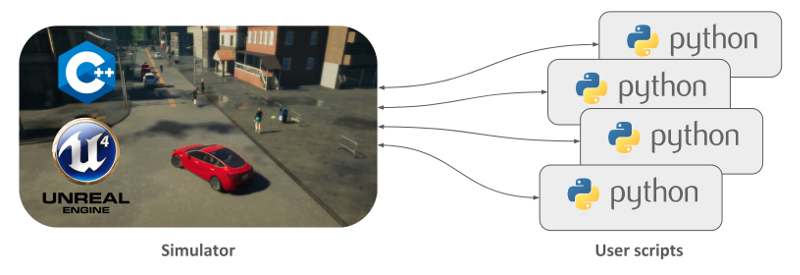

Car Simulators

CARLA

Paper: CARLA: An Open Urban Driving Simulator

- Traffic manager. A built-in system that takes control of the vehicles besides the one used for learning. It acts as a conductor provided by CARLA to recreate urban-like environments with realistic behaviours.

- Sensors. Vehicles rely on them to gather information about their surroundings. In CARLA they are a specific kind of actor attached to the vehicle, and the data they receive can be retrieved and stored to ease the process. The project currently supports many sensor types, from cameras to radars, LiDAR, and more.

- Recorder. This feature is used to reenact a simulation step by step for every actor in the world. It grants access to any moment in the timeline anywhere in the world, making for a great tracing tool.

- ROS bridge and Autoware implementation. To broaden compatibility, the CARLA project works on integrating the simulator with other learning environments.

- Open assets. CARLA provides different maps for urban settings with control over weather conditions, and a blueprint library with a wide set of actors to be used. These elements can be customized, and new ones can be generated by following simple guidelines.

- Scenario runner. A feature intended to ease the learning process for vehicles.

AirSim

Cars in AirSim

Drones in AirSim

Install AirSim

Releases (.zip)

For Windows, the following environments are available:

- AbandonedPark

- Africa (uneven terrain and animated animals)

- AirSimNH (small urban neighborhood block)

- Blocks

- Building_99

- CityEnviron

- Coastline

- LandscapeMountains

- MSBuild2018 (soccer field)

- TrapCamera

- ZhangJiajie

For Linux, the following environments are available:

- AbandonedPark

- Africa (uneven terrain and animated animals)

- AirSimNH (small urban neighborhood block)

- Blocks

- Building_99

- LandscapeMountains

- MSBuild2018 (soccer field)

- TrapCamera

- ZhangJiajie

- Download and unzip an environment (e.g. AirSimNH.zip)

- Find the executable file and run it

Windows : AirSimNH/WindowsNoEditor/AirSimNH/Binaries/Win64/AirSimNH.exe

Linux : AirSimNH/LinuxNoEditor/AirSimNH/Binaries/Linux/AirSimNH

User Interface

- press F1 for hot-keys help

- press Arrow-keys or A-S-W-D to drive

- press Backspace to reset & restart

- press Alt-F4 to quit AirSim

- press 0 for sub-windows (shown below)

- press the REC button to record Car/Drone info; the recorded file path will be shown on screen

For Car,

VehicleName TimeStamp POS_X POS_Y POS_Z Q_W Q_X Q_Y Q_Z Throttle Steering Brake Gear Handbrake RPM Speed ImageFile

For Drone,

VehicleName TimeStamp POS_X POS_Y POS_Z Q_W Q_X Q_Y Q_Z ImageFile
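A recording with the car columns above can be loaded with the standard `csv` module. This is a sketch under assumptions: it treats the recording as a tab-separated text file whose first line is the header, and the sample row is made up for illustration. `read_car_recording` is a hypothetical helper, not part of the AirSim client.

```python
import csv
import io

CAR_COLUMNS = [
    "VehicleName", "TimeStamp", "POS_X", "POS_Y", "POS_Z",
    "Q_W", "Q_X", "Q_Y", "Q_Z", "Throttle", "Steering",
    "Brake", "Gear", "Handbrake", "RPM", "Speed", "ImageFile",
]


def read_car_recording(stream):
    """Parse a car recording (assumed tab-separated with a header line)."""
    rows = []
    for row in csv.DictReader(stream, delimiter="\t"):
        rows.append({
            "timestamp": int(row["TimeStamp"]),
            "position": (float(row["POS_X"]), float(row["POS_Y"]), float(row["POS_Z"])),
            "throttle": float(row["Throttle"]),
            "steering": float(row["Steering"]),
            "speed": float(row["Speed"]),
            "image": row["ImageFile"],
        })
    return rows


# Made-up sample: one header line and one data row.
sample = "\t".join(CAR_COLUMNS) + "\n" + "\t".join([
    "Car1", "1000", "0.0", "0.0", "-0.5", "1.0", "0.0", "0.0", "0.0",
    "0.5", "-0.1", "0.0", "1", "0", "1500.0", "3.2", "img_0.png",
]) + "\n"

rows = read_car_recording(io.StringIO(sample))

if __name__ == "__main__":
    print(rows[0]["steering"], rows[0]["image"])
```

For a real file, pass `open(path, newline="")` instead of the `io.StringIO` sample. The image and steering pairs are the kind of input an end-to-end model trains on.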

Manual Drive


Reinforcement Learning in AirSim

The simulation needs to be up and running before you execute dqn_car.py!!!

- Keep the AirSim environment running first

cd AirSimNH/LinuxNoEditor

./AirSimNH.sh -ResX=640 -ResY=480 -windowed

(On Windows, click AirSimNH.exe to run; Alt-Enter switches to windowed mode)

- To train the DQN model for 500,000 episodes (RL with Car)

Open a Terminal on Linux / run Git-Bash on Windows

pip install gym

pip install stable-baselines3

cd ~

git clone https://github.com/rkuo2000/AirSim

cd ~/AirSim/PythonClient/reinforcement_learning

python dqn_car.py
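Since the training script needs the simulator running first, a small pre-flight check can save a failed start. The sketch below tries a TCP connection to the simulator. It assumes AirSim is listening on its default API port 41451 on the local machine (the port can be changed in AirSim's settings), and `simulator_is_up` is a hypothetical helper, not part of the AirSim client.

```python
import socket


def simulator_is_up(host="127.0.0.1", port=41451, timeout=1.0):
    """Return True if something accepts TCP connections at host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    if simulator_is_up():
        print("AirSim seems to be running; it should be safe to start dqn_car.py")
    else:
        print("No simulator found on port 41451; start the AirSim environment first")
```

A successful connection only shows that a process is listening on that port, not that the environment has finished loading.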

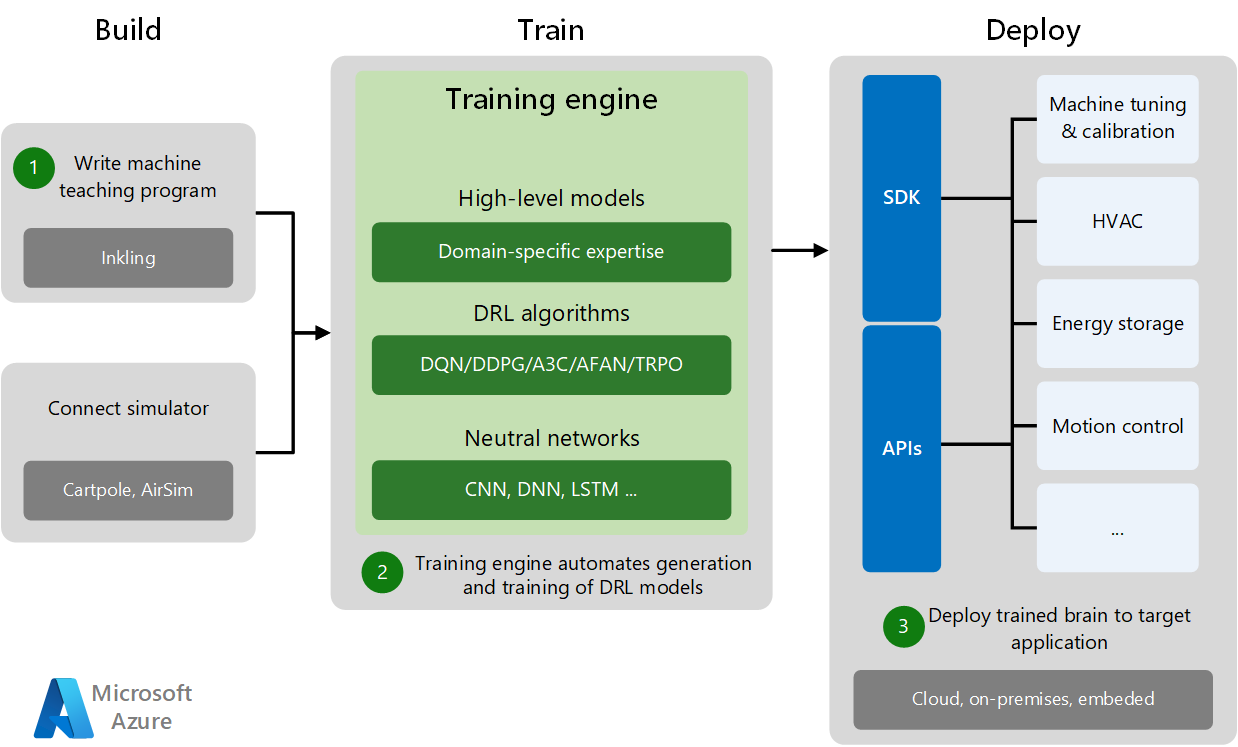

Autonomous Driving Cookbook


Currently, the following tutorials are available:

- Autonomous Driving using End-to-End Deep Learning: an AirSim tutorial

- Distributed Deep Reinforcement Learning for Autonomous Driving

Kaggle: AirSim End-to-End Learning

Build Model with input image and state (driving angle)

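# Assumed import; the original snippet omits it

from tensorflow.keras import layers, models

# Input shapes come from one sample batch: [images, state]

image_input_shape = sample_batch_train_data[0].shape[1:]

state_input_shape = sample_batch_train_data[1].shape[1:]

# Create the convolutional stacks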

pic_input = layers.Input(shape=image_input_shape)

x = layers.Conv2D(16, (3, 3), activation='relu', padding='same')(pic_input)

x = layers.MaxPooling2D(pool_size=(2,2))(x)

x = layers.Conv2D(32, (3, 3), activation='relu', padding='same')(x)

x = layers.MaxPooling2D(pool_size=(2, 2))(x)

x = layers.Conv2D(32, (3, 3), activation='relu', padding='same')(x)

x = layers.MaxPooling2D(pool_size=(2, 2))(x)

x = layers.Flatten()(x)

x = layers.Dropout(0.2)(x)

#Inject the state input

state_input = layers.Input(shape=state_input_shape)

m = layers.concatenate([x, state_input])

# Add a few dense layers to finish the model

m = layers.Dense(64, activation='relu')(m)

m = layers.Dropout(0.2)(m)

m = layers.Dense(10, activation='relu')(m)

m = layers.Dropout(0.2)(m)

m = layers.Dense(1)(m)

model = models.Model(inputs=[pic_input, state_input], outputs=m)

model.summary()
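To see how the image and state inputs meet in the model above, it helps to trace the tensor shapes by hand. The sketch below does this in plain Python without Keras. The image shape `(59, 255, 3)` and a state vector of size 1 (the driving angle) are assumptions for illustration, since the real shapes come from `sample_batch_train_data`.

```python
def conv_stack_output(image_shape, filters=(16, 32, 32), pool=2):
    """Shape after each Conv2D('same') + MaxPooling2D(2, 2) pair.

    'same' padding keeps height and width; pooling with the default
    'valid' padding floors the division by the pool size.
    """
    h, w, _ = image_shape
    for _ in filters:
        h, w = h // pool, w // pool
    return h, w, filters[-1]


def dense_params(n_in, n_out):
    """Trainable parameters of a Dense layer: weights plus biases."""
    return n_in * n_out + n_out


image_shape = (59, 255, 3)   # assumed input image size
state_size = 1               # assumed: a single driving-angle value

h, w, c = conv_stack_output(image_shape)
flat = h * w * c             # size after Flatten()
merged = flat + state_size   # size after concatenating the state input

if __name__ == "__main__":
    print("conv output:", (h, w, c))
    print("flattened:", flat, "merged:", merged)
    print("Dense(64) parameters:", dense_params(merged, 64))
```

Almost all of the trainable parameters sit in the first dense layer, which is why the pooling layers that shrink the image matter for model size.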

Test Result

Azure

Road Tests

Tesla Self-Driving

Cruise

Waymo

DeepRoute.ai (元戎启行)

Xiaomi Self-Driving

Self-Driving 2.0

- Drive with instance segmentation model

- Depth Estimation

- RNN

This site was last updated December 22, 2022.